IP Networking: Adjusting the Send Socket Buffer and the TCP Receive Window Size (RWND) by the Bandwidth Delay Product (BDP) for Maximum TCP Throughput

Posted on October 25, 2021

Ten years ago, back in 2011, the Internet Engineering Task Force (IETF) released a rather thoroughly written Request for Comments (RFC) on the negative correlation between the maximum TCP throughput and the round-trip time (RTT) over an IP network (RFC 6349). With reference to the Bandwidth-Delay Product (BDP), which constitutes the in-flight capacity of a data path, it suggests the socket buffer size required at both ends of the transmission to achieve maximum TCP throughput (RFC 1072). Still, to this day, the crippling effect of RTT on TCP throughput is often neglected. This is despite high-bandwidth networks of 100 Mbps+ upstream and downstream, wired or wireless, having become the norm. They are the result of an ever-improving internet on a fibre backbone and the rising popularity of 5G operating on higher frequency bands.

TCP and Congestion Control

Despite this neglect, the negative effect of RTT on TCP throughput is in fact rather intuitive and straightforward.

Unlike its counterpart, the User Datagram Protocol (UDP) (RFC 768), the Transmission Control Protocol (TCP) (RFC 793) in the transport layer of the TCP/IP stack is connection-oriented. In other words, a connection must be established by a handshake before any data is transmitted. TCP is designed for reliable delivery, and as such has built-in mechanisms for delivery acknowledgement, retransmission, and congestion control in the event of packet loss.

Reliable delivery rests on acknowledgement, and TCP's only tool to avoid congestion is to limit the amount of in-flight, unacknowledged data. The window size of in-flight data allowed is therefore a crucial determinant of the maximum throughput of any TCP session.

For example, take a 10 Gbps network path with an RTT of 100 ms. If for whatever reason the sender and receiver allow only 64 KB (kilobytes) of in-flight data in a single TCP session, the throughput of this session will never exceed 64 KB x 8 bits divided by 0.100 second = 5.24 Mbps (taking 64 KB as 65,536 bytes). There may be unused capacity on the data path, but the sender is still not allowed to send any more data while 64 KB of it is in flight and unacknowledged. Nor is the receiver prepared to accept any more.
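The arithmetic above can be sketched as a simple bound. At most one window of data can be unacknowledged per round trip, so throughput cannot exceed window x 8 / RTT, whatever the link speed. The function name below is illustrative, and 64 KB is taken as 65,536 bytes.

```python
def max_tcp_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Window-limited ceiling: at most one full window delivered per round trip."""
    return window_bytes * 8 / rtt_s


LINK_BPS = 10e9            # 10 Gbps path from the example above
WINDOW_BYTES = 64 * 1024   # 64 KB in-flight limit, taken as 65,536 bytes
RTT_S = 0.100              # 100 ms round-trip time

ceiling_bps = max_tcp_throughput_bps(WINDOW_BYTES, RTT_S)
# The session can go no faster than the lower of the link rate and the window ceiling.
achievable_bps = min(LINK_BPS, ceiling_bps)

print(f"window ceiling: {ceiling_bps / 1e6:.2f} Mbps")  # window ceiling: 5.24 Mbps
print(f"share of link used: {achievable_bps / LINK_BPS:.4%}")
```

The second line makes the waste explicit: the 10 Gbps path is almost entirely idle.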

Send Socket Buffer and Receive Window (RWND)

This mechanism is well understood. From the TCP connection handshake onwards, the receiver advertises its receive window (RWND) to the sender in the TCP header, while the sender maintains its own congestion window (CWND). Together they let flow and congestion control be applied whenever needed during the transmission.

As elaborated in the previously mentioned RFC 6349, the CWND is a dynamic window. It lets the sender limit the amount of in-flight, unacknowledged data should it detect packet loss, for example when an acknowledgement fails to arrive within the retransmission timeout. The CWND is a crucial part of the congestion avoidance algorithm. That algorithm offers real-time congestion control after a slow start and a subsequent, gradual increase in the amount of unacknowledged data injected into the network path. The CWND can, however, never usefully exceed what the send socket buffer at the sender endpoint can hold, since every unacknowledged byte must stay buffered for possible retransmission. That buffer size is enforced by the kernel or the application.

The RWND, on the other hand, is derived from the receive buffer size of the network socket at the receiver endpoint. It tells the sender how many bytes the receiver is capable of accepting at a given time. With knowledge of both, the sender takes the lower of the CWND and the RWND as the window of data it allows in flight without acknowledgement.

It should then be apparent that the send socket buffer and the RWND must both be at least equal to the in-flight capacity of the data path, i.e. the BDP, for a TCP session to attain the maximum throughput its available bandwidth permits. Otherwise the maximum TCP throughput will be limited by the buffer and window sizes instead of by the Bottleneck Bandwidth (BB) of the data path. In short, it comes down to three formulae.
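The sender's in-flight limit can be modelled as a minimum over the three quantities just described. This is a simplified sketch. Real kernels (Linux, for instance) set aside part of each buffer for bookkeeping, so usable space is somewhat lower. The function name and window values below are hypothetical.

```python
def effective_window(cwnd: int, rwnd: int, sndbuf: int) -> int:
    """Bytes the sender may keep in flight in this simplified model.

    The sender honours the lower of its congestion window and the receiver's
    advertised window. It also cannot have more unacknowledged data than its
    send socket buffer holds, since those bytes are kept for retransmission.
    """
    return min(cwnd, rwnd, sndbuf)


# Hypothetical session on a 10 Gbps path with a 100 ms RTT (integer arithmetic).
LINK_BPS = 10_000_000_000
RTT_MS = 100
BDP_BYTES = LINK_BPS * RTT_MS // 1000 // 8   # 125,000,000 bytes

window = effective_window(cwnd=200_000_000, rwnd=64 * 1024, sndbuf=4 * 1024**2)
print(window)               # 65536: the receive window is the bottleneck
print(window >= BDP_BYTES)  # False: the path cannot be filled
```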

- BDP (bits) = RTT (second) x BB (bps)

- Minimum TCP Send Socket Buffer and RWND (bytes) = BDP (bits) / 8 bits

- Theoretical Maximum TCP Throughput (bps) = TCP RWND (bytes) x 8 bits / RTT (second)
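The three formulae translate directly into code. The sketch below uses a hypothetical 100 Mbps bottleneck with a 100 ms RTT, in line with the 100 Mbps+ networks mentioned earlier. It sizes the buffer from the BDP, then checks that a window of exactly that size would fill the pipe.

```python
def bdp_bits(rtt_s: float, bb_bps: float) -> float:
    """BDP (bits) = RTT (second) x BB (bps)."""
    return rtt_s * bb_bps


def min_buffer_bytes(rtt_s: float, bb_bps: float) -> float:
    """Minimum TCP send socket buffer and RWND (bytes) = BDP (bits) / 8."""
    return bdp_bits(rtt_s, bb_bps) / 8


def max_throughput_bps(rwnd_bytes: float, rtt_s: float) -> float:
    """Theoretical maximum TCP throughput (bps) = RWND (bytes) x 8 / RTT (second)."""
    return rwnd_bytes * 8 / rtt_s


# Hypothetical example: a 100 Mbps bottleneck with a 100 ms RTT.
rtt, bb = 0.100, 100e6
buf = min_buffer_bytes(rtt, bb)
print(f"BDP: {bdp_bits(rtt, bb):,.0f} bits")       # BDP: 10,000,000 bits
print(f"minimum buffer/RWND: {buf:,.0f} bytes")    # minimum buffer/RWND: 1,250,000 bytes
print(f"ceiling with that RWND: {max_throughput_bps(buf, rtt) / 1e6:.1f} Mbps")  # 100.0 Mbps
```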

iPerf and Traffic Control

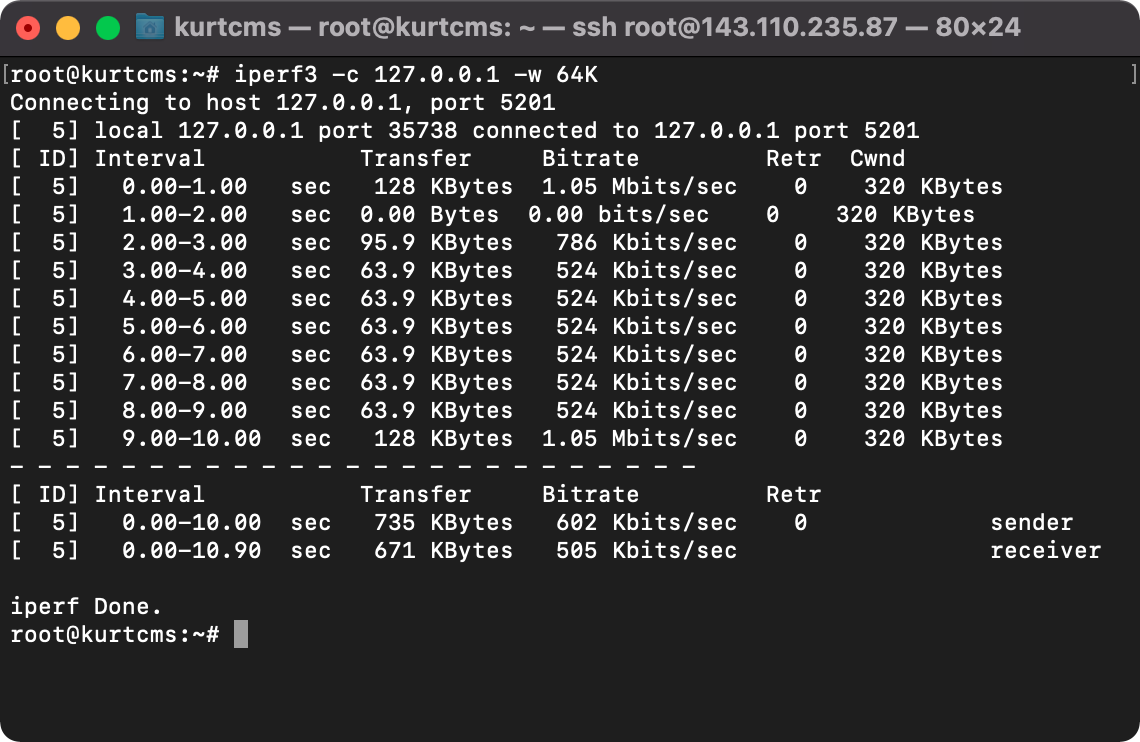

With this in mind, many applications that rely on TCP for data transmission allow custom values to be set for performance tuning: the send socket buffer at the client side and the RWND at the server side. On iPerf, the industry-standard network evaluation tool, setting these is as simple as supplying the -w or --window option followed by the desired send socket buffer and RWND size.

iperf3 -c *server-ip-address* -w *size-in-kilobytes-or-megabytes*
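Outside iPerf, an application can usually request the same sizes itself through the standard SO_SNDBUF and SO_RCVBUF socket options. The Python sketch below uses only the standard library; the helper name is hypothetical. On Linux the kernel doubles the requested value to allow for bookkeeping overhead, caps it at net.core.wmem_max / net.core.rmem_max, and reports the doubled value from getsockopt, so the printed numbers may differ from the request.

```python
import socket


def tuned_tcp_socket(buffer_bytes: int) -> socket.socket:
    """Create a TCP socket with explicit send and receive buffer sizes (hypothetical helper)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Set both sizes before connect()/listen(): the window scale factor is
    # negotiated in the SYN, so enlarging the receive buffer afterwards may
    # not translate into a larger advertised window.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, buffer_bytes)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, buffer_bytes)
    return sock


sock = tuned_tcp_socket(64 * 1024)
sndbuf = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
rcvbuf = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
print(f"SO_SNDBUF={sndbuf} SO_RCVBUF={rcvbuf}")  # what the kernel actually granted
sock.close()
```

On Linux, setting these options explicitly also locks the buffer sizes and turns off the kernel's automatic buffer tuning for that socket. That is one reason to set them only when the BDP of the path is known.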

Demonstrating the negative effect of RTT on TCP throughput with iPerf is rather straightforward. A host is reachable from itself at the localhost address 127.0.0.1 on the loopback network interface. On Linux, the tc utility (part of iproute2) configures the kernel's traffic control, including the netem queueing discipline that delays packets.

Latency can therefore be added to the loopback interface. The host then establishes an iPerf session to itself at the localhost address over the latency-added loopback path, with a specific send socket buffer and RWND size. This shows the drop in throughput as the RTT increases. Both the data segments and their acknowledgements leave through the loopback interface, so each direction is delayed once and the resulting RTT is roughly twice the delay added.

tc qdisc add dev lo root netem delay *single-trip-delay-to-add-to-the-path*
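Because the delay is applied once in each direction on the loopback interface, the value passed to netem is half the target RTT. A small, hypothetical Python helper can build the matching add and remove commands. It only constructs and prints them; actually running them requires root.

```python
import shlex
from typing import List, Tuple


def netem_commands(target_rtt_ms: float, dev: str = "lo") -> Tuple[List[str], List[str]]:
    """Build tc commands that add, and later remove, netem delay on `dev`.

    On the loopback interface both the data segments and the ACKs are delayed,
    so the one-way delay is half the target RTT.
    """
    one_way_ms = target_rtt_ms / 2
    add = ["tc", "qdisc", "add", "dev", dev, "root", "netem", "delay", f"{one_way_ms:g}ms"]
    remove = ["tc", "qdisc", "del", "dev", dev, "root"]
    return add, remove


add_cmd, del_cmd = netem_commands(100)
print(shlex.join(add_cmd))  # tc qdisc add dev lo root netem delay 50ms
print(shlex.join(del_cmd))  # tc qdisc del dev lo root
# To apply them (as root), e.g.: subprocess.run(add_cmd, check=True)
```

Removing the qdisc afterwards matters: until it is deleted, every local connection on the host keeps the added delay.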

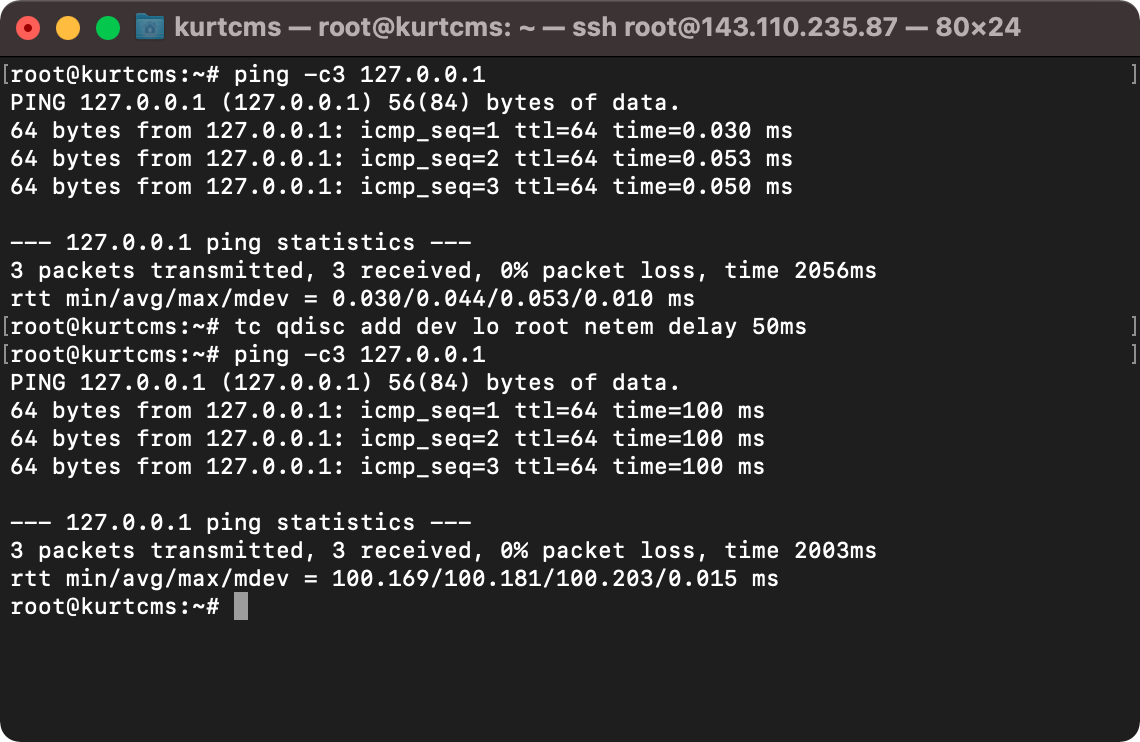

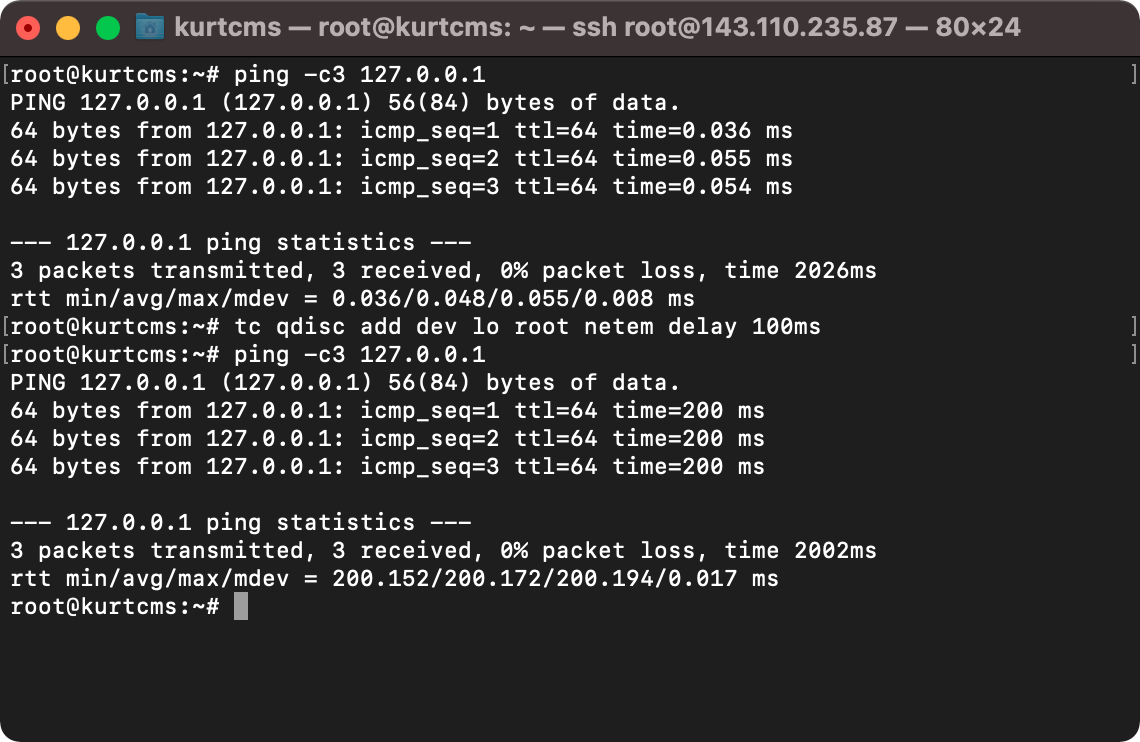

| RTT of 100 ms | RTT of 200 ms |
| --- | --- |
| Traffic control with tc on the loopback interface for an RTT of 100 ms | Traffic control with tc on the loopback interface for an RTT of 200 ms |

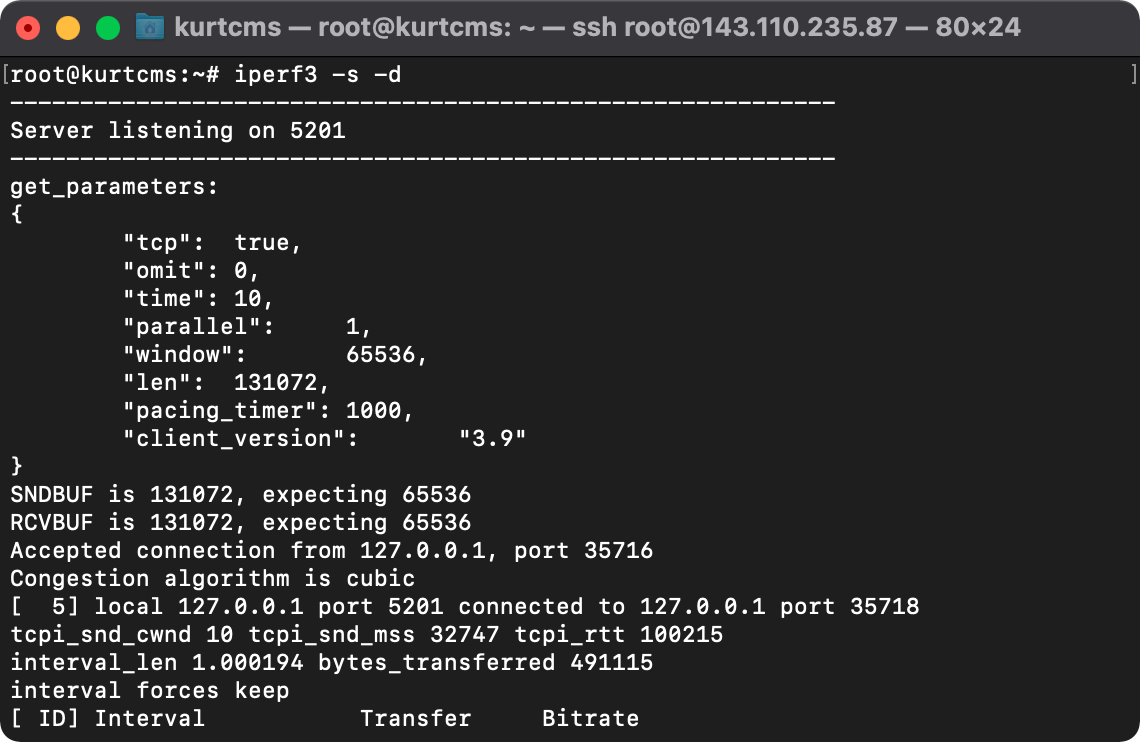

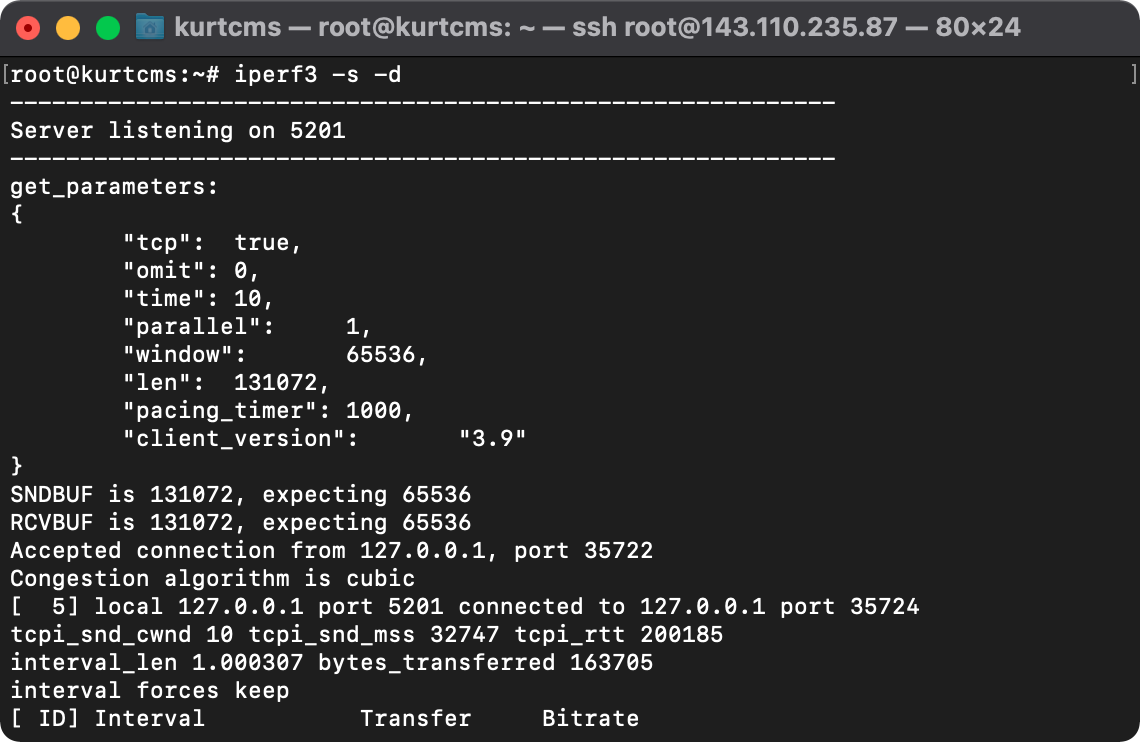

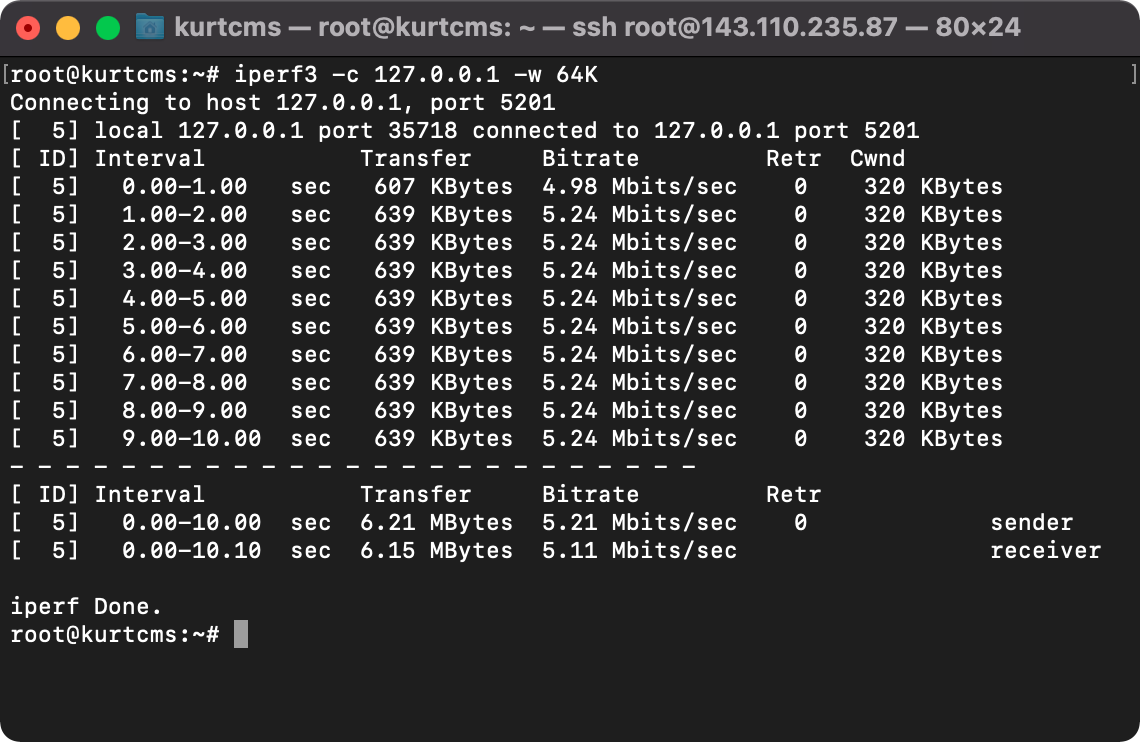

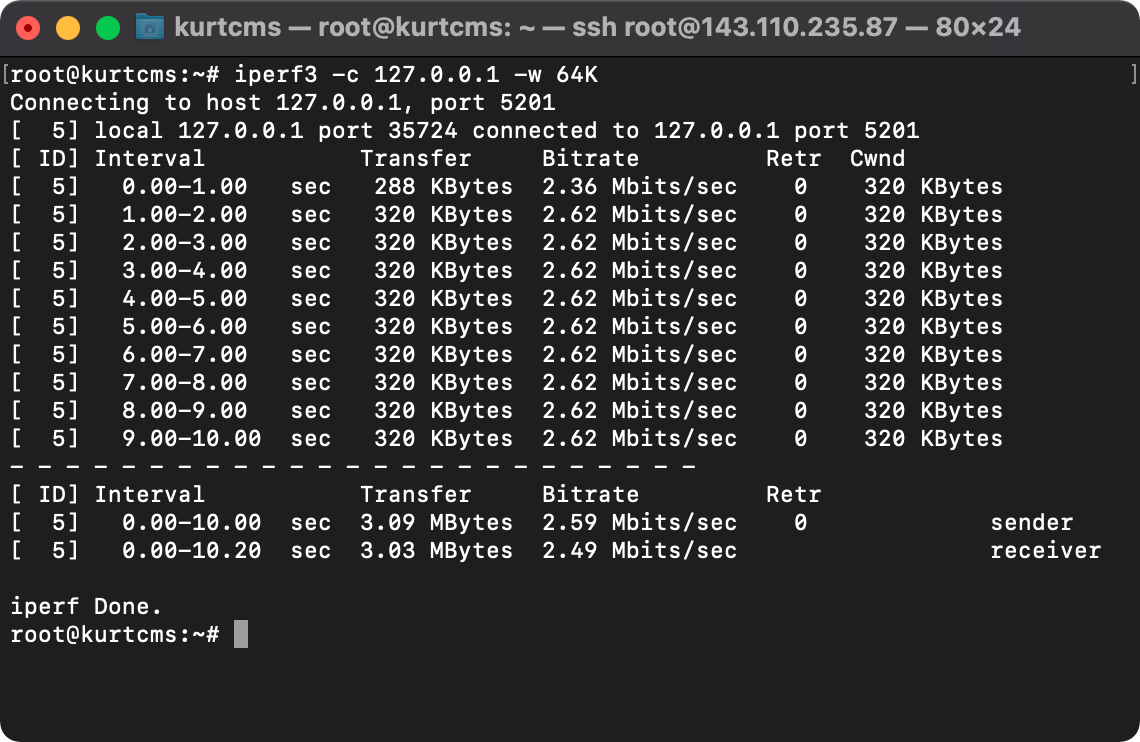

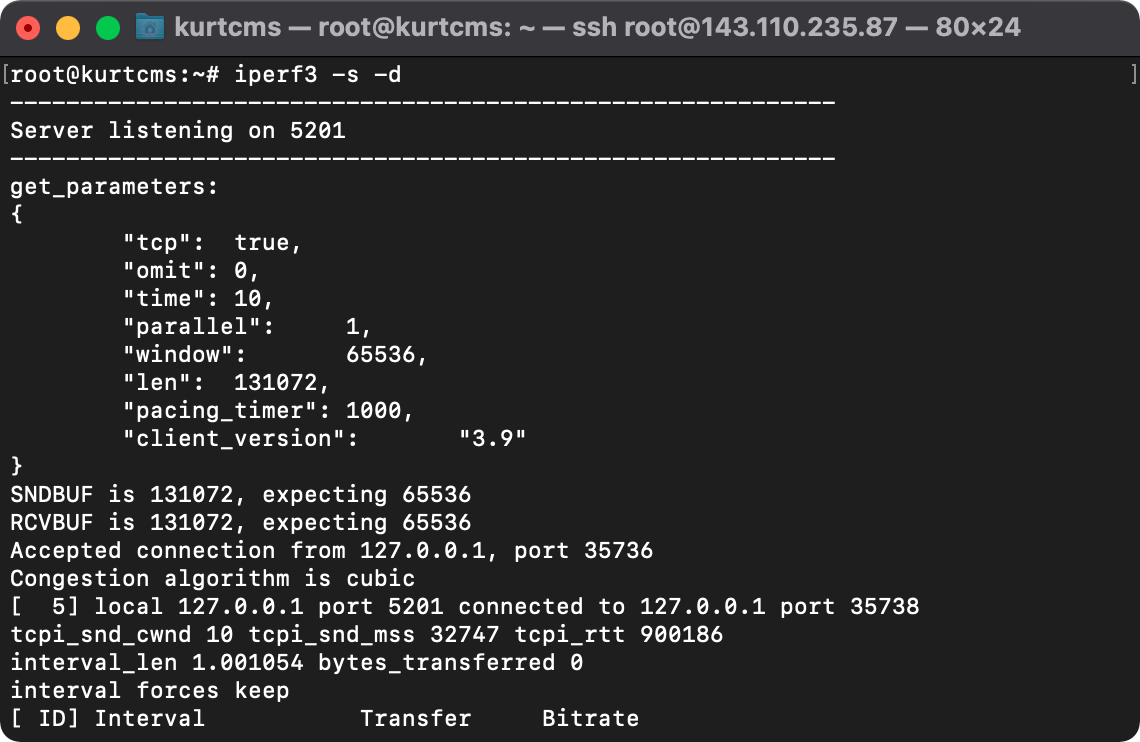

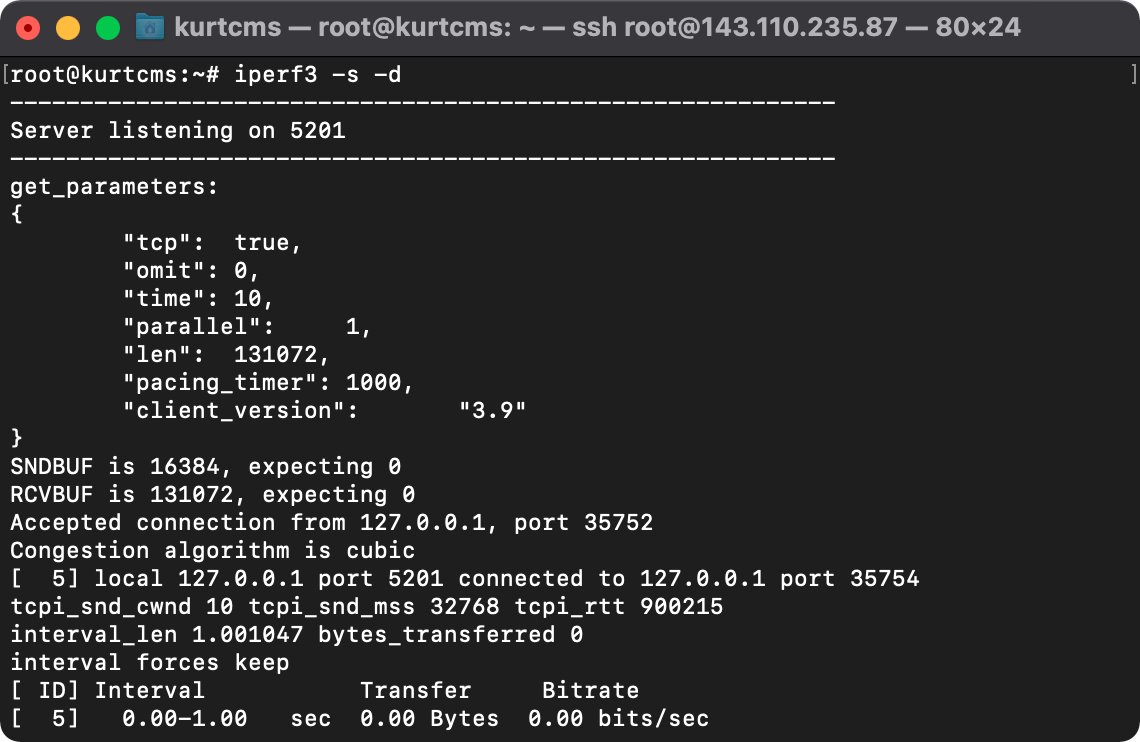

| RTT of 100 ms | RTT of 200 ms |
| --- | --- |
| iPerf3 server receiving a request for a TCP RWND size of 64 KB | iPerf3 server receiving a request for a TCP RWND size of 64 KB |
| Throughput of around 5.16 Mbps in a single direction, in line with the theoretical maximum throughput of 5.24 Mbps given the RTT and TCP RWND (64 KB x 8 bits / 0.1 second) | Throughput of around 2.55 Mbps in a single direction, in line with the theoretical maximum throughput of 2.62 Mbps given the RTT and TCP RWND (64 KB x 8 bits / 0.2 second) |

As should be apparent, as the RTT on the data path increases, the send socket buffer and RWND must increase in proportion to maintain maximum TCP throughput.
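The proportional growth is easy to tabulate. The sketch assumes a hypothetical 100 Mbps bottleneck and applies the minimum-buffer formula across a range of RTTs. Integer arithmetic keeps the figures exact.

```python
BOTTLENECK_BPS = 100_000_000  # hypothetical 100 Mbps path

required = {}
for rtt_ms in (10, 100, 200, 900):
    # Minimum send buffer / RWND (bytes) = BB (bps) x RTT (s) / 8, with RTT given in ms.
    required[rtt_ms] = BOTTLENECK_BPS * rtt_ms // 1000 // 8
    print(f"RTT {rtt_ms:>3} ms -> at least {required[rtt_ms]:,} bytes")

# RTT  10 ms -> at least 125,000 bytes
# RTT 100 ms -> at least 1,250,000 bytes
# RTT 200 ms -> at least 2,500,000 bytes
# RTT 900 ms -> at least 11,250,000 bytes
```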

TCP Window Scaling

Then came another problem. When TCP was first specified 40 years ago, in 1981, in RFC 793, the TCP header field allocated for the window size was merely two bytes (16 bits) long. It could therefore hold a value of at most (2 to the power of 16) - 1 = 65,535 bytes, just under 64 KB. Truth be told, 40 years ago no one could have envisioned the popularity and adoption of TCP today, let alone the size, scale and massive bandwidth of the networks it runs on these days. Two bytes in the TCP header for a window size of up to 64 KB was considered more than adequate at the time.

With a maximum TCP window of 64 KB, however, VSAT internet becomes a problem. It bounces IP packets to a satellite in geostationary orbit (GEO), roughly 35,786 km above the earth, and back, so the RTT can easily reach 900 ms. The theoretical maximum TCP throughput then drops to 64 KB x 8 bits divided by 0.900 second = 0.58 Mbps. That is not even one-tenth of the VSAT bandwidth on offer in the market, or of what one would normally expect of a functional internet connection.
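A rough check of the VSAT case is shown below. It assumes the satellite is directly overhead and ignores all processing and queueing delay, so the computed RTT is only a lower bound. It then applies the 16-bit window limit to that bound and to the 900 ms figure above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
GEO_ALTITUDE_KM = 35_786            # altitude of geostationary orbit above the equator
MAX_UNSCALED_WINDOW = 2**16 - 1     # largest value a 16-bit window field can hold

# Lower bound on a GEO round trip: ground -> satellite -> ground, then back again.
min_geo_rtt_s = 4 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S
print(f"minimum GEO RTT: {min_geo_rtt_s * 1000:.0f} ms")  # minimum GEO RTT: 477 ms

for rtt_s in (min_geo_rtt_s, 0.900):
    ceiling_mbps = MAX_UNSCALED_WINDOW * 8 / rtt_s / 1e6
    print(f"RTT {rtt_s * 1000:.0f} ms -> at most {ceiling_mbps:.2f} Mbps")
```

Even at the physical minimum RTT, the unscaled window caps a single session at around 1 Mbps.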

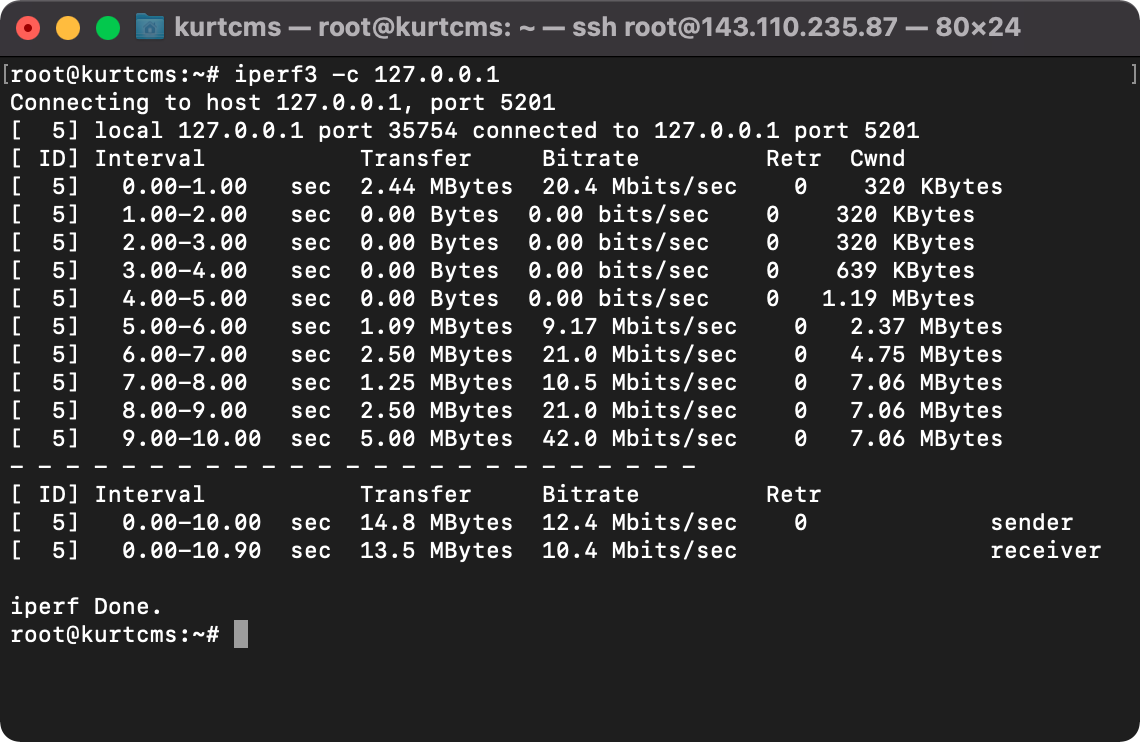

The space allocated for the window value in the TCP header could not be changed without affecting the entire segment structure. The modularity of its design nonetheless permits extensions such as TCP window scaling (RFC 7323), which lets the TCP window scale beyond the 64 KB limit without enlarging its 2-byte field.

During the handshake, the sender and receiver each include a TCP window scale option in their SYN segments, and scaling takes effect only if both do. The option carries a shift count of up to 14, and the 16-bit window value is then multiplied by 2 to the power of that shift count. This gives a maximum scaled window of 64 KB x (2 to the power of 14) = 1 GB (gigabyte).

On a VSAT link with an RTT of 900 ms, this allows a theoretical maximum TCP throughput of 1 GB x 8 bits divided by 0.900 second, roughly 9.5 Gbps. That is far beyond any VSAT bandwidth, so the window is no longer the bottleneck. Demonstrating the effect of TCP window scaling with iPerf is rather straightforward as well: the sysctl tool toggles the kernel parameter for the TCP window scaling option.
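The scaling rule is a left shift of the 16-bit field value. The sketch below follows RFC 7323's cap on the shift count, under which a received value above 14 is treated as 14. It also adds a hypothetical helper for the smallest shift whose scaled maximum covers a given buffer. That is roughly the question a stack must answer when choosing its scale factor, though real implementations may decide differently.

```python
MAX_WINDOW_FIELD = 2**16 - 1  # largest value of the 16-bit window field
MAX_SHIFT = 14                # RFC 7323 caps the window scale shift count at 14


def scaled_window(field_value: int, shift: int) -> int:
    """Actual window in bytes: the 16-bit field value shifted left by the scale."""
    # RFC 7323: a shift count above 14 is treated as 14.
    return field_value << min(shift, MAX_SHIFT)


def shift_needed(window_bytes: int) -> int:
    """Smallest shift count whose scaled maximum window covers `window_bytes` (hypothetical helper)."""
    shift = 0
    while scaled_window(MAX_WINDOW_FIELD, shift) < window_bytes and shift < MAX_SHIFT:
        shift += 1
    return shift


largest = scaled_window(MAX_WINDOW_FIELD, MAX_SHIFT)
print(f"largest window: {largest:,} bytes")                      # largest window: 1,073,725,440 bytes
print(f"ceiling at 900 ms: {largest * 8 / 0.9 / 1e9:.2f} Gbps")  # ceiling at 900 ms: 9.54 Gbps
print(shift_needed(125_000_000))  # 11: enough for a 10 Gbps x 100 ms BDP
```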

sysctl -w net.ipv4.tcp_window_scaling=*<0 or 1>*
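Before toggling the option, it can help to check its current value alongside the kernel's TCP buffer limits. On Linux, sysctl reads and writes files under /proc/sys, with the dots in a parameter name replaced by slashes. The sketch below relies only on that mapping and assumes a Linux host; elsewhere it prints None. The parameters net.ipv4.tcp_rmem and net.ipv4.tcp_wmem each hold three values in bytes (minimum, default, maximum), and the maximum bounds how far the kernel grows a socket buffer by itself. Writing a value, like sysctl -w, requires root.

```python
from pathlib import Path
from typing import Optional


def sysctl_path(name: str) -> Path:
    """Map a dotted sysctl name to its file under /proc/sys (Linux)."""
    return Path("/proc/sys") / name.replace(".", "/")


def read_sysctl(name: str) -> Optional[str]:
    """Read a sysctl value, or return None where /proc/sys is unavailable."""
    path = sysctl_path(name)
    return path.read_text().strip() if path.exists() else None


for key in ("net.ipv4.tcp_window_scaling", "net.ipv4.tcp_rmem", "net.ipv4.tcp_wmem"):
    print(key, "=", read_sysctl(key))
```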

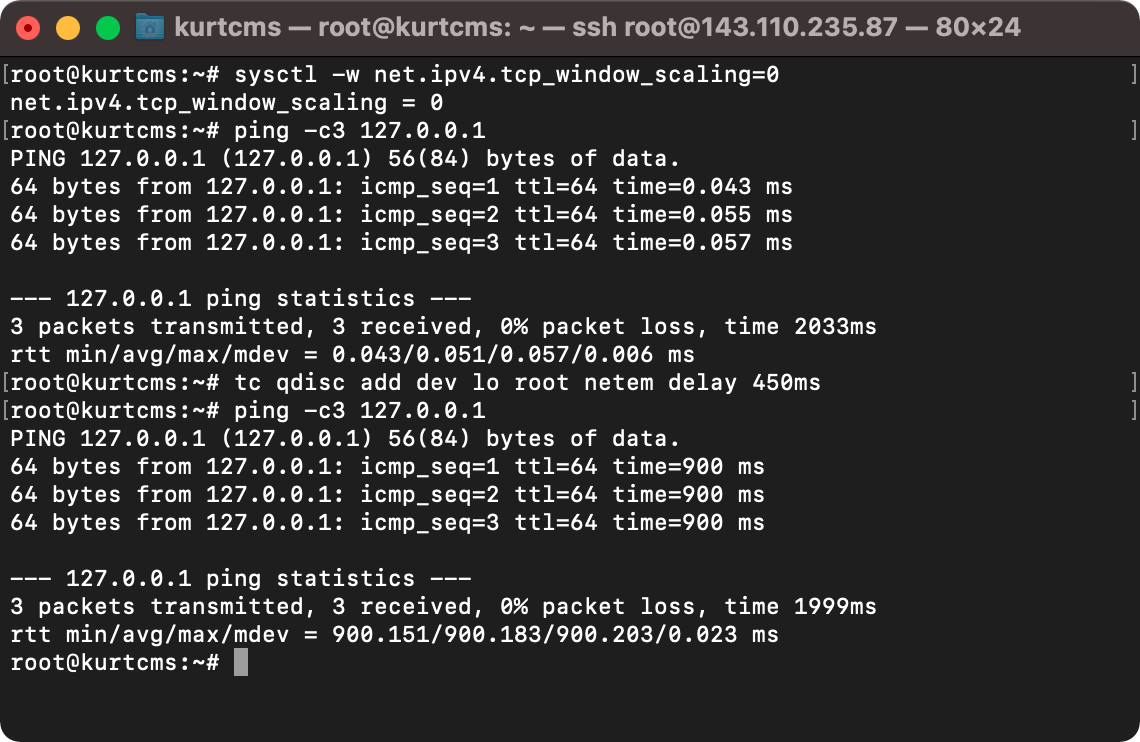

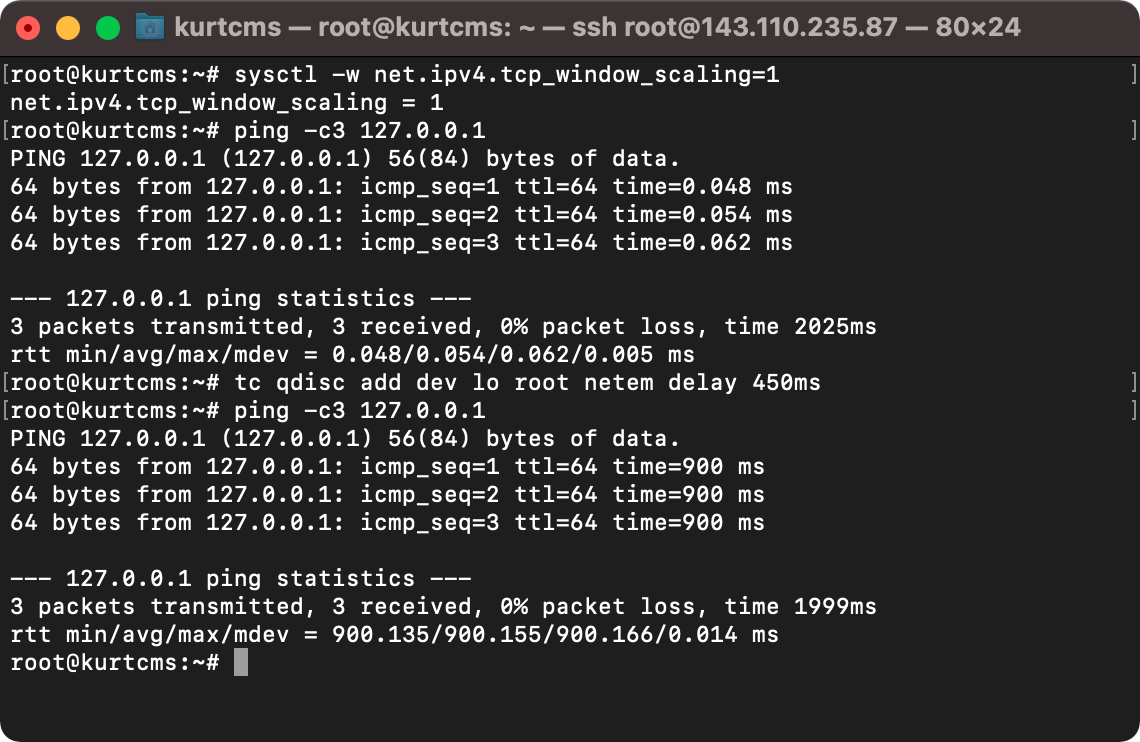

| TCP window scaling disabled with sysctl, and traffic control with tc on the loopback interface for an RTT of 900 ms | TCP window scaling enabled with sysctl, and traffic control with tc on the loopback interface for an RTT of 900 ms |
| --- | --- |
| iPerf3 server receiving a request for a TCP RWND size of 64 KB | iPerf3 server receiving no request for a fixed TCP RWND size |
| Throughput of around 0.55 Mbps in a single direction, in line with the theoretical maximum throughput of 0.58 Mbps given the RTT and TCP RWND (64 KB x 8 bits / 0.9 second) | Throughput of 40 Mbps+ in a single direction towards the end of the session, with TCP window scaling taking the window beyond the 64 KB limit |
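The reported result can also be turned around. Rearranging the throughput formula gives the amount of data that must have been in flight to sustain it. The minimal sketch below takes 40 Mbps as a round figure for the "40 Mbps+" reading.

```python
MAX_WINDOW_FIELD = 2**16 - 1  # largest unscaled window, in bytes

throughput_bps = 40e6  # ~40 Mbps reported towards the end of the session
rtt_s = 0.900          # 900 ms RTT enforced with tc

# Rearranging throughput = window x 8 / RTT gives the window that must have been in flight.
window_bytes = throughput_bps * rtt_s / 8
print(f"in flight: at least {window_bytes:,.0f} bytes")                     # in flight: at least 4,500,000 bytes
print(f"about {window_bytes / MAX_WINDOW_FIELD:.0f}x the unscaled limit")  # about 69x the unscaled limit
```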

Thoughts

As marvellous as the modularity of TCP is, there comes a time when extensions pile up more technical debt than they help, and sadly that time is about now. Earlier this year, in 2021, after years of deliberation in its working group, the IETF published the first batch of RFCs on QUIC (RFC 9000). QUIC builds on the connectionless UDP in the transport layer. On top of it, QUIC implements congestion control and loss recovery, as well as Transport Layer Security (TLS) and stream multiplexing, crossing multiple layers. It aims to capture most of the value provided by TCP and HTTP/2 (RFC 7540) that the modern internet requires. Forty years is an aeon in technology; with the birth of QUIC, there is perhaps no better time for TCP to take a back seat and hang up its boots.