IP Networking: Technical and Commercial Evaluation of a Software-Defined Network (SDN) for a Managed SD-WAN Service

Posted on March 1, 2021 — 40 Minutes Read

I was once in charge of a product development project to expand the coverage of our Managed Software-Defined Wide Area Network (SD-WAN) Service beyond the territories in which we had a tried and trusted, in-operation and at-scale Multi-Protocol Label Switching (MPLS, RFC 3031) IPVPN, into regions where our end customers were expanding or migrating their business and where, unfortunately, we did not yet operate a network. Expanding into regions without an existing MPLS IPVPN meant, for one, that there was no in-operation, service-level-assured network to serve as the middle-mile backbone upon which our Managed SD-WAN Service could be built. The existing network design was premised on decoupling the first- and last-mile connection, which in most cases was the off-the-shelf internet that our end customers already had at their premises, from the middle-mile backbone network, which was our MPLS IPVPN at the time. Without that backbone, the design demanded a bit of rethinking. The various approaches to this challenge, and the commercial and technical evaluations relevant to one of them, are explored in the discussion that follows, with confidential and sensitive information redacted.

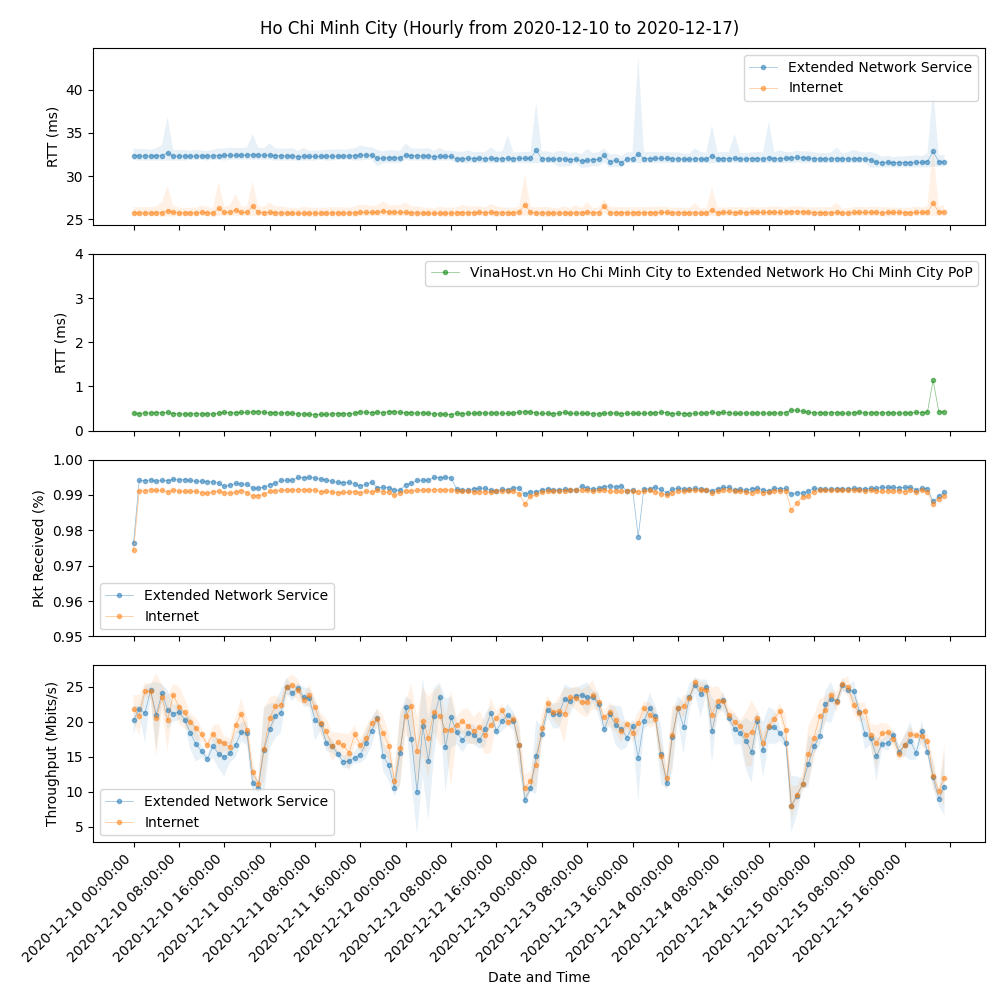

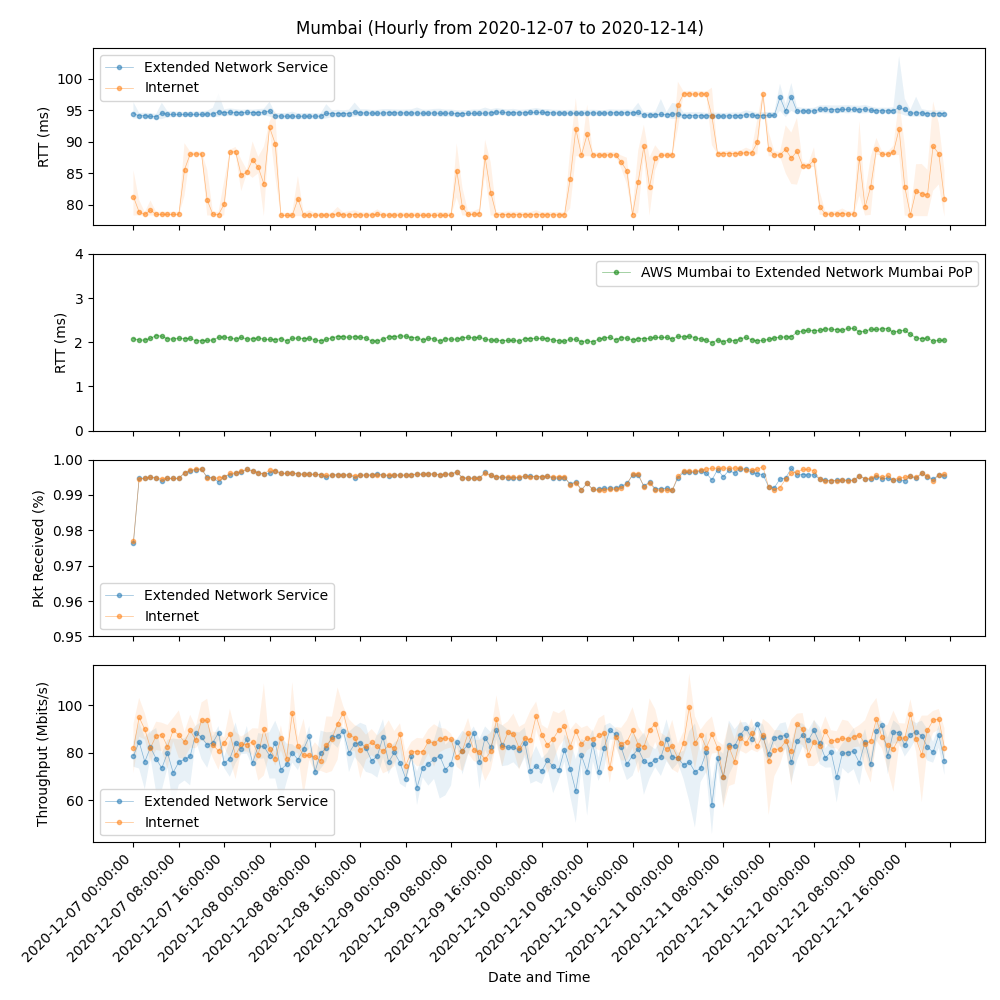

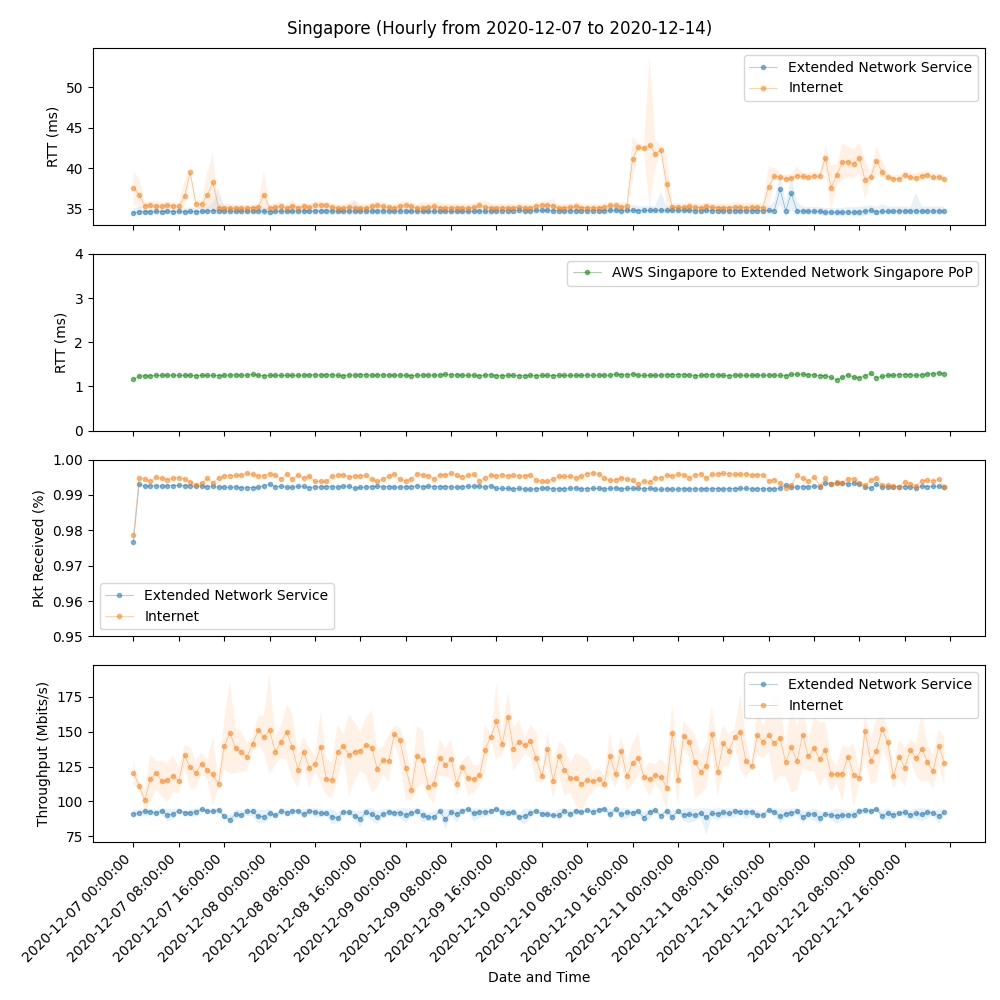

In short, a technical evaluation from December 7th to 14th for Mumbai and Singapore and from December 10th to 17th for Ho Chi Minh City revealed that with an extended network:

- From Ho Chi Minh City to Hong Kong, jitter dropped by as much as 69% from 5.01ms to 1.66ms and latency increased by 22% from 26.30ms to 32.10ms compared with direct internet, with packet loss holding steady at 0.79%.

- From Mumbai to Hong Kong, jitter dropped by as much as 76% from 5.87ms to 1.42ms and latency increased by 14% from 82.97ms to 94.58ms compared with direct internet, with packet loss holding steady at 0.5%.

- From Singapore to Hong Kong, jitter dropped by as much as 53% from 2.53ms to 1.19ms compared with direct internet, while latency and packet loss held steady at 35ms and 0.5-0.7% respectively.

An extended network was therefore a viable option for expanding the network coverage of our Managed SD-WAN Service into regions without an existing MPLS IPVPN.
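The percentage changes quoted above are plain before-and-after arithmetic. As a minimal sketch, the hypothetical helper `pct_change` below (not part of the original tooling) reproduces the Mumbai figures from the numbers quoted in the list; a negative result means a reduction.

```bash
#!/bin/sh
# pct_change OLD NEW: signed percentage change from OLD to NEW, one decimal place.
# Hypothetical helper for illustration only.
pct_change() {
    awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - old) / old * 100 }'
}

# Mumbai to Hong Kong, direct internet versus extended network.
pct_change 5.87 1.42     # jitter in ms
pct_change 82.97 94.58   # latency in ms
```

The same call can be made with the figures for the other two cities to cross-check the summary.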

The off-the-shelf domestic internet at the premises of existing and targeted SD-WAN customers in Vietnam and India is often of lower quality and more erratic than the direct internet baseline used in the evaluation, which would further strengthen the case for an extended network as part of a middle-mile backbone.

The rest of the code is shared on GitHub for reference and further development.

Background

Business Expansion and Relocation

Globalisation was gathering pace and Hong Kong enterprises were expanding their operations to the rest of the world. Most notable was the relocation of manufacturing bases to countries such as Vietnam, Cambodia and India, either on cost considerations or as a result of heightening US-China tensions, and these enterprises constituted a notable part of our existing and targeted SD-WAN customer base. It was therefore of utmost importance for our Managed SD-WAN Service to expand its overseas coverage with a middle-mile network that provided a service level adequate for business use, at a cost that allowed a reasonable profit margin per customer across their overall SD-WAN networks in Hong Kong and overseas. Ideally the network could be provisioned on demand as operating expenses (OPEX), without extra capital expenditure (CAPEX) or additional physical hardware and network resources, which would have been difficult to budget for or manage given that our principal business operation was in Hong Kong.

Design Principle and Architecture

The existing network design was premised on decoupling the first- and last-mile connection, which in most cases was the off-the-shelf internet that our end customers already had at their premises, from the middle-mile backbone network, which was our MPLS IPVPN at the time. Addressing the design challenge first and foremost required an in-depth understanding of why the design called for a middle-mile network in the first place.

The internet is without doubt a modern wonder, and with its ever-decreasing cost and rapidly rising bandwidth and reliability, it was proving to be a viable business network option in certain regions. Indeed, this rise of the internet was one of the major drivers behind SD-WAN which, having evolved from its predecessor, the Software-Defined Network (SDN), was adapted to harness the cost-effectiveness and increasing reliability of the internet to create a new kind of business network, a Software-Defined Wide Area Network as it was called, that provided performance and reliability on par with a traditional leased-line business network at only a fraction of the cost. While this sounded wonderful in theory, and perhaps in reality in some regions, for most of the world the network infrastructure on which the internet was built, whether within a national border or across nations, introduced complexity to this picture.

For off-the-shelf internet, service performance and service levels were often not part of the contractual commitment from the telecommunications operator to the end user. An operator therefore often based its routing decisions on cost rather than performance, especially for international traffic that it had to hand over to network infrastructure built and owned by another operator overseas, to which it might have to pay for IP transit. For end users this meant that while off-the-shelf internet might deliver reasonable performance within a national border, across borders its latency, jitter (the fluctuation in latency over time) and packet loss, the usual measures of reliability, could increase several-fold to a point beyond what was acceptable for critical business applications.

This performance degradation of off-the-shelf internet when crossing a national border, and the erratic nature of its performance overall, was the precise reason for a network design that leveraged a middle-mile backbone network. Network traffic was transmitted over cost-effective internet from the SD-WAN Edge Customer Premises Equipment (CPE) commissioned at the customer premises to the SD-WAN Gateway Provider Edge (PE) at our in-country MPLS IPVPN Point of Presence (PoP), which constituted the first mile. From there it was carried across national borders on our service-level-assured MPLS IPVPN, the middle-mile backbone, to another SD-WAN Gateway PE at an MPLS IPVPN PoP in the region of the destination. Finally it was delivered to the SD-WAN Edge CPE at its destination over the cost-effective internet in that region, which constituted the last mile of the journey.

The Overseas Middle-mile Network Dilemma

Knowing the importance of a service-level-assured middle-mile backbone in the existing design, the challenge was how to expand the coverage of the Managed SD-WAN Service beyond regions with an existing MPLS IPVPN PoP. Additional managed service arrangements for service delivery, on-site commissioning of the SD-WAN Edge CPE, and customer and network operations in the new regions would also be needed, but they are beyond the scope of the present discussion. For network coverage, the most natural solution to a telecommunications veteran was to locate the incumbent telecommunications operators in these regions and negotiate a Network-to-Network Interconnection (NNI, RFC 6215) with them, on either a bilateral IP peering or a payment-based IP transit partnership, that would allow each operator to tap into the existing MPLS IPVPN PoPs of the other to host SD-WAN Gateway PEs and increase network coverage. This was certainly a viable option. The problem was the time and effort required to hold the technical and commercial discussions and arrive at an agreement, which by experience could take anywhere from months to years. On top of that came yet another approval process for the CAPEX investment required by the resulting agreement, both for the MPLS IPVPN, if additional routing equipment was needed, and for SD-WAN, which would certainly require additional server hardware to operate the SD-WAN Gateway PE that accepted and terminated the traffic from the SD-WAN Edge CPE at the customer premises before carrying it across the MPLS IPVPN.

Crucial to any fruitful commercial partnership was also the projected business that could be harvested from the investment, by CAPEX or otherwise. Given the novelty of an evolving technology such as SD-WAN and the relative uncertainty over its direction and growth, any business projection would be difficult if not entirely impossible, and a business case built upon such a projection would only be as shaky as its foundation.

An Extended Network for Overseas

An alternative approach would eliminate or limit CAPEX, given the difficulty of business forecasting and the uncertainty over projected growth, while still enabling our Managed SD-WAN Service to expand into regions with no coverage. It called for a service and partnership model that, much like SD-WAN technology itself, offered the best of both worlds. The envisioned model was premised on commissioning on demand, as OPEX, an existing third-party network service of some kind as an extended network. With a reasonable service level, it would carry network traffic of any kind, on any protocol and port, between customer premises in regions beyond the reach of our MPLS IPVPN and regions where we had an MPLS IPVPN PoP, without additional hardware and hence without additional CAPEX. Once received at the SD-WAN Gateway PE at our MPLS IPVPN PoP, traffic would be carried across national borders to its destination as per the existing architecture and design. The third-party extended network would in essence be a plug-and-play, pay-per-Mbps network extension for regions where the business and the forecast did not yet justify, in cost or in time, establishing a new NNI partnership with the incumbent operators and operating additional SD-WAN Gateway PEs at their MPLS IPVPN PoPs.

| | MPLS IPVPN | Existing On-premise Internet | Extended Network |
|---|---|---|---|
| CAPEX | Extra CAPEX for SD-WAN Gateway hardware resources | No Extra CAPEX (Existing On-premise Internet) | No CAPEX |
| OPEX | Extra per-Mbps and marginal on-net cost at a relatively lower rate | No Extra OPEX (Existing On-premise Internet) | Extra per-Mbps and marginal on-net cost at a relatively higher rate |
| Cross-border Performance | Service level assured | Best-effort | Service level assured |
| Ownership and Controllability | High controllability | No controllability | Controllable by the extended network provider |
| Fit for Business Use | Yes | No | Yes |

Extended or acceleration networks of this kind, while still a relatively small marketplace, were not unheard of as it turned out. Examples included Magic Transit by Cloudflare, which with its built-in DDoS mitigation had a distinctive security flavour, and IP Application Accelerator by Akamai, which on the other hand was geared towards the gaming market owing to its network architecture and vertical market focus. Several of these networks were explored in a number of internal and external discussions, and eventually a select few were shortlisted for in-depth commercial discussion and technical evaluation. The aim was to determine their price performance compared with each other and with the alternative scenario, in which an NNI partnership is established and an SD-WAN Gateway PE operated with an incumbent telecommunications operator in the region of interest, as a measure of their suitability as an interim extension network for our Managed SD-WAN Service.

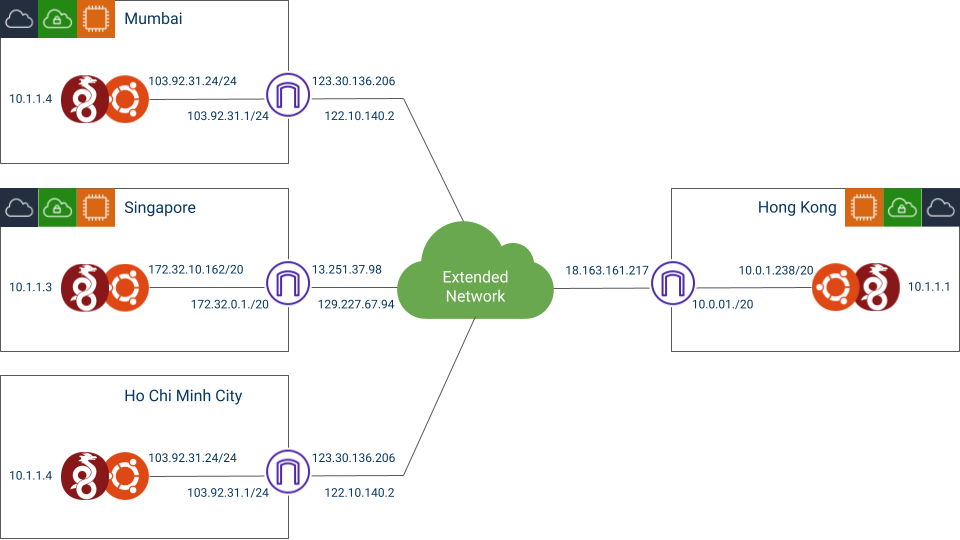

An Extended Network for Southeast Asia

For Vietnam, Cambodia and India, where there would be immediate overseas SD-WAN service demand for the reasons previously discussed, our Hong Kong SD-WAN Gateway PE with its MPLS IPVPN middle-mile backbone would be the primary SD-WAN Gateway PE, and the extended or acceleration network would carry traffic between it and the customer premises. The network of choice had to have PoPs in these countries, both to keep the first- and last-mile distance over the off-the-shelf on-premise internet as short as possible and to ensure that the first and last mile stayed within the national border, because international bandwidth was scarcer and more expensive than domestic bandwidth and there was often a drastic difference between network performance within and across a border, as previously discussed. The per-Mbps OPEX cost of the acceleration network had to allow a reasonable profit margin per customer across their overall SD-WAN network in Hong Kong and overseas, with network performance fit for business use and ideally on par with MPLS IPVPN. The commercial and technical evaluations are discussed next.

Evaluation

Commercial

The commercial evaluation was rather straightforward. The baseline was the projected CAPEX for the additional hardware equipment and servers, plus the per-Mbps OPEX, of establishing an NNI partnership and operating an SD-WAN Gateway PE, over which an extended or acceleration network would certainly have a cost advantage at the outset given the absence of CAPEX investment. Yet a dollar paid is a dollar of cost. One would expect that beyond a certain level of network usage, the benefit of a lower per-Mbps OPEX bought with a hefty upfront CAPEX would come home and render the total cost of an NNI partnership with an SD-WAN Gateway PE lower than that of commissioning a third-party network service, even taking full account of the depreciation and amortisation of the additional hardware and of the projected cash flows discounted at a required internal rate of return. It came down to a matter of when and where that breakeven point would be for the extended or acceleration network of choice.
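To make the breakeven idea concrete, here is a minimal sketch under stated assumptions. The function `breakeven_month` and every figure passed to it are hypothetical and are not the redacted commercial data. The model is deliberately simple: the NNI option pays a CAPEX upfront and then saves a fixed amount per Mbps per month relative to the extended network, with each monthly saving discounted at a constant monthly rate. Depreciation schedules, tax and traffic growth are ignored.

```bash
#!/bin/sh
# breakeven_month CAPEX SAVING_PER_MBPS MBPS MONTHLY_RATE
# Prints the first month in which the cumulative discounted OPEX saving of the
# NNI option covers its upfront CAPEX, or "never" if that does not happen
# within 240 months. All inputs are hypothetical illustration values.
breakeven_month() {
    awk -v capex="$1" -v delta="$2" -v mbps="$3" -v rate="$4" 'BEGIN {
        saved = 0
        discount = 1
        for (m = 1; m <= 240; m++) {
            discount *= (1 + rate)            # discount factor for month m
            saved += delta * mbps / discount  # present value of the saving in month m
            if (saved >= capex) { print m; exit }
        }
        print "never"
    }'
}

# Hypothetical: 50,000 CAPEX, 20 per Mbps per month cheaper, 100 Mbps, 1% per month.
breakeven_month 50000 20 100 0.01
```

With these made-up inputs the undiscounted breakeven is month 25 and the discounted one is month 29. Below that usage horizon the extended network is the cheaper option, and above it the NNI partnership is.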

The same approach went for the technical evaluation, with the projected or committed service level and performance of the MPLS IPVPN of the NNI partner in the expansion region as the baseline that an extended or acceleration network would have to meet or even outperform. Most of the networks explored came with a service availability guarantee, e.g. 99.99%, and a 24×7 network operations centre (NOC) for proactive network monitoring. Since their networks were often built on a mix of private backbones and public internet, however, there was no PoP-to-PoP performance guarantee on latency, jitter and packet loss. These were nonetheless crucial, both for a comparison against the baseline they had to meet or beat and for the head-to-head price performance ranking needed to reach a selection decision. For that reason, a real-world evaluation had to be commissioned on the shortlisted networks to estimate their performance. Details of the technical evaluation for one particular acceleration network are explored in the discussion that follows, with confidential and sensitive information redacted. The rest of the shortlisted networks followed the same evaluation, with minor adaptations to fit the characteristics of the particular network that still kept the results fully comparable with the rest.

Purpose

The purpose of this technical evaluation is to assess whether the acceleration network of interest is a fitting extended network for the overseas coverage of our Managed SD-WAN Service, particularly in Vietnam, Cambodia and India. Because the existing Hong Kong SD-WAN Gateway PE will be the primary SD-WAN Gateway PE, and the acceleration network, if selected, will carry traffic between it and the customer premises, the evaluation measures network performance between these countries and Hong Kong.

The acceleration network of interest operated a PoP in Ho Chi Minh City in Vietnam and in Mumbai in India. Cambodia was still out of its reach, so Singapore, the nearest PoP to Cambodia in terms of network distance, was evaluated instead, with the caveat that the first- and last-mile performance between Cambodia and Singapore is beyond the scope of this evaluation.

| Country | Vietnam | Cambodia | India |
|---|---|---|---|
| Extended Network PoP | Ho Chi Minh City | Singapore | Mumbai |

Resources and Specifications

The evaluation was planned from December 7th to 14th for a round-the-clock, round-the-week performance measurement. Amazon Web Services Elastic Compute Cloud (AWS EC2) instances in a Virtual Private Cloud (VPC), referred to here as AWS EC2 VPC, with on-demand compute capacity, tried and trusted internet resources, and availability precisely in Mumbai, Singapore and Hong Kong where the acceleration network of interest has PoPs, were the perfect compute resources for this evaluation. In Vietnam, where AWS EC2 does not operate, another cloud compute provider had to be found and tested. That turned out to be a rather bumpy ride which eventually delayed the evaluation start date for Vietnam by three days, from December 7th to 10th, although the plan had been to start the evaluation for all three regions on the same date.

The troublesome journey of finding a fitting cloud compute provider in Ho Chi Minh City is documented in the appendix. A proper provider was eventually found and, other than delaying the evaluation start date for Vietnam from December 7th to 10th, there was no lasting material impact on the evaluation results. The details in the appendix are intended for reference in case a closer study is in order in the future. At first glance the issues encountered might hint at a problematic internet infrastructure in Vietnam that could hinder SD-WAN adoption as a whole or, in a more positive light, might point to what our Managed SD-WAN Service would need to overcome in order to out-compete the rest in this specific market.

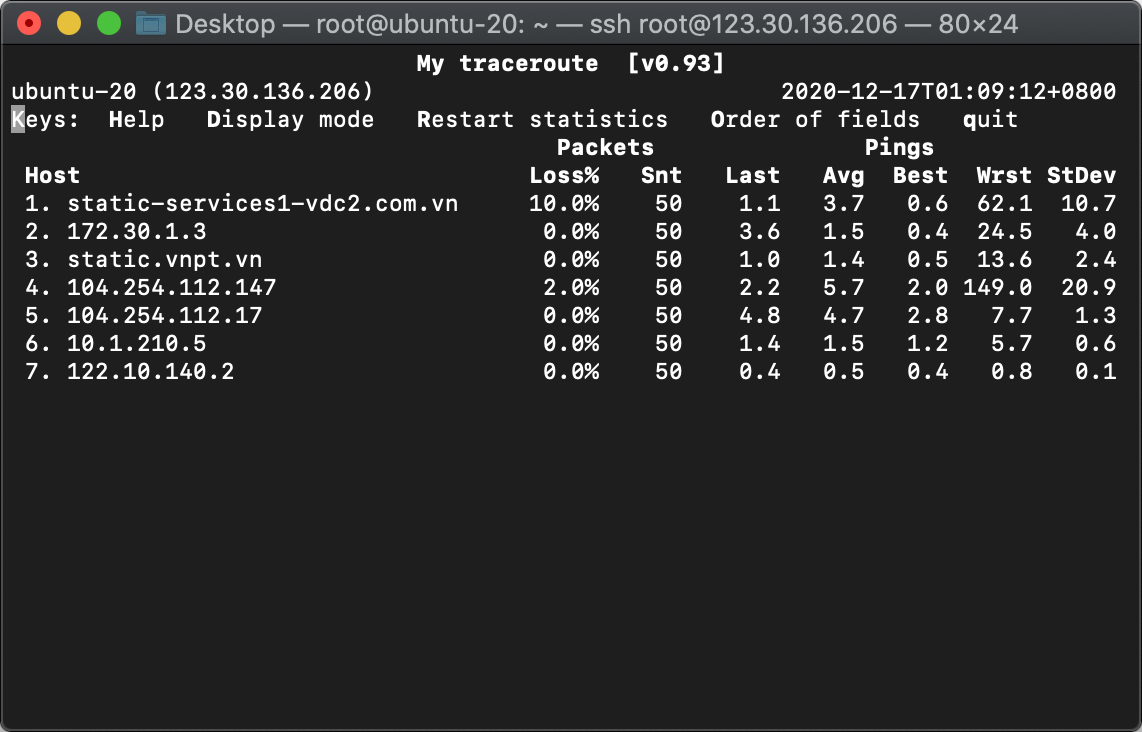

VinaHost Vietnam, with its on-demand Virtual Private Server (VPS) offering, was found to be a proper cloud compute provider for the evaluation. Its VPS is housed in the VDC2 NTMK data centre in Ho Chi Minh City, operated by the Vietnam Datacommunication Company, a member of the state-owned Vietnam Posts and Telecommunications Group (VNPT). Ho Chi Minh City is precisely where the acceleration network to be evaluated has a PoP, and the internet resources are provided by VNPT as well.

Given that the immediate SD-WAN service demand in Vietnam, Cambodia and India was projected to be from 10Mbps to 100Mbps, AWS EC2 VPC of the minimum compute capacity capable of at least 100Mbps bandwidth and a VinaHost VPS of up to 100Mbps throughput were commissioned for the evaluation. Detailed specifications are listed below for reference.

| Evaluation Date | Dec 10th to Dec 17th | Dec 7th to 14th | ||

|---|---|---|---|---|

| Region | Vietnam | Cambodia | India | Hong Kong |

| Extended Network PoP | Ho Chi Minh City | Singapore | Mumbai | Hong Kong |

| Compute Resources Provider | VinaHost Vietnam in VDC2 NTMK | AWS Singapore | AWS India | AWS Hong Kong |

| Compute Specifications |

1x vCPU 512MB RAM 12GB SSD |

1x vCPU 1GB RAM 8GB SSD |

||

| Internet Resources Provider | VNPT | AWS Singapore | AWS India | AWS Hong Kong |

| Internet Specifications |

1x IPv4 IP Address 100Mbps Bandwidth |

1x IPv4 IP Address At least 100Mbps Bandwidth |

||

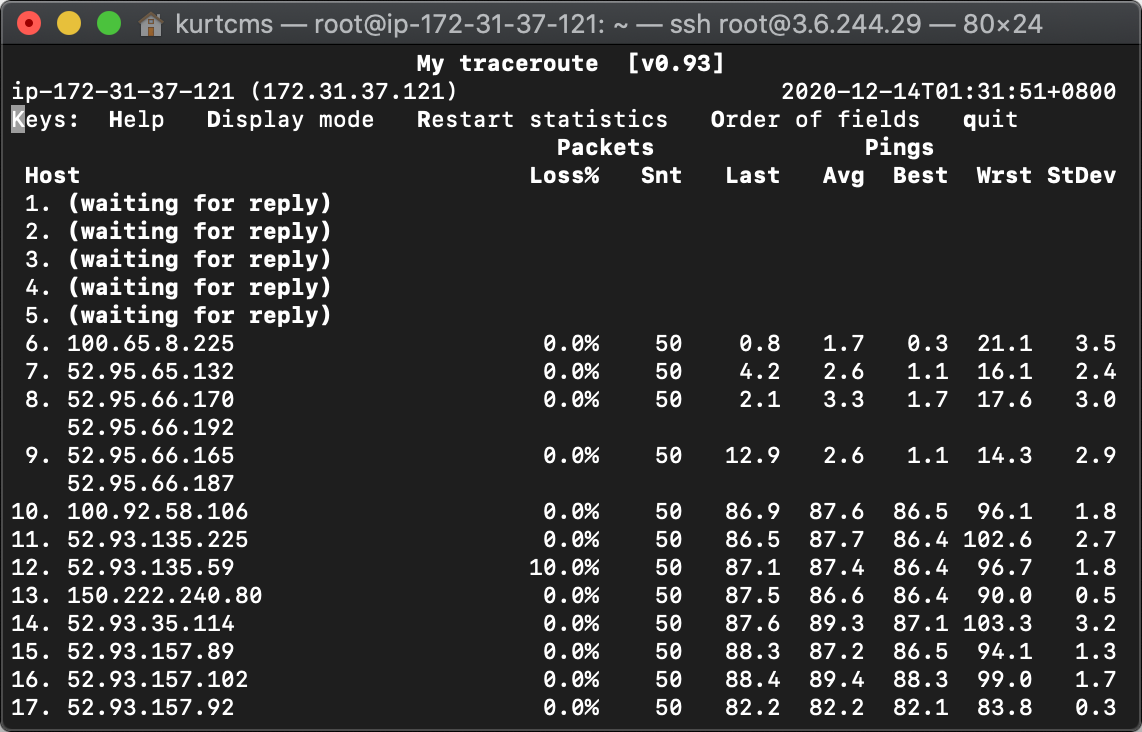

| Internet IP Address | 123.30.136.206 | 13.251.37.98 | 3.6.244.29 | 18.163.161.217 |

Topology and Tools

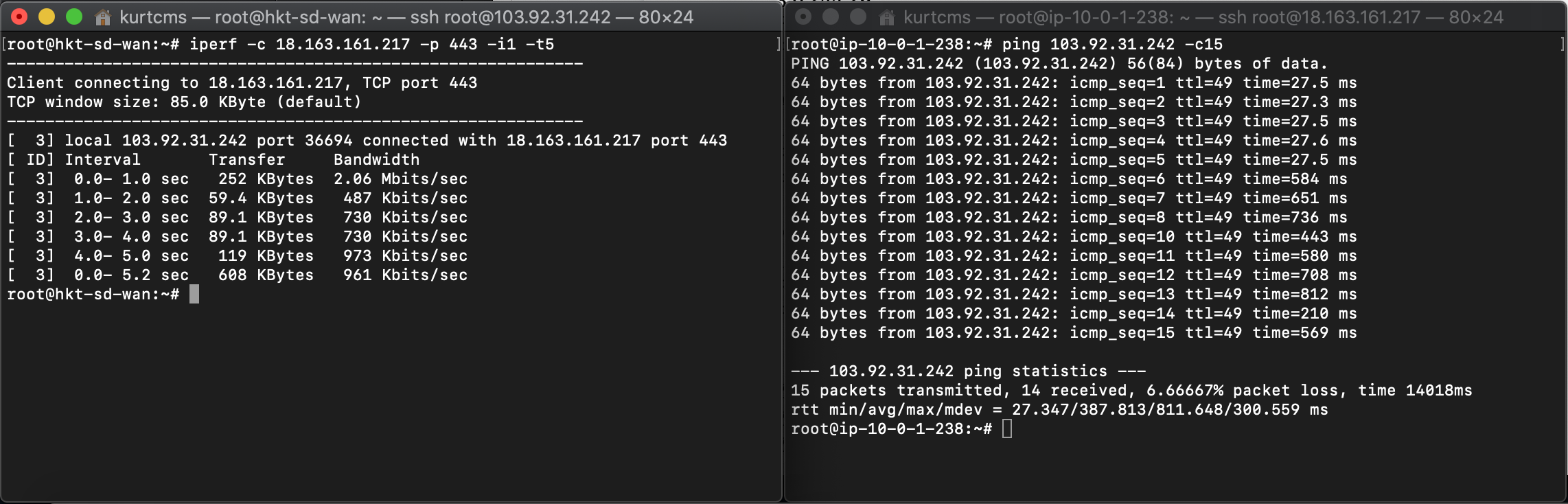

The evaluation topology was designed to be as simple as possible, with two logical paths: a tunnelled path through the extended network between the compute in Hong Kong and the compute in the region of evaluation, and a direct path between the same compute over the internet as a baseline reference. Ubuntu Linux was the operating system for the AWS EC2 VPC and the VinaHost VPS for its simplicity. The tunnelling protocol of choice was WireGuard which, despite being only about four years old, is widely acclaimed for its simple configuration and user-friendliness, its performance over alternatives such as IPsec (RFC 4301) and OpenVPN, and its relatively lean 4,000-line work-of-art code base, merely 1% of that of IPsec and OpenVPN, which translates directly into less room for software bugs and security vulnerabilities. WireGuard was as such quickly incorporated into the Linux and the FreeBSD kernels and rapidly adopted by a number of commercial VPN service vendors. For its simplicity, performance advantage and relative stability, it was an excellent tunnelling protocol for this evaluation.

WireGuard tunnels over UDP on any port of choice. Since the technology vendor for our Managed SD-WAN Service used a proprietary tunnelling protocol that establishes its encrypted tunnel on UDP 2426, the same protocol and port were used for WireGuard to emulate the SD-WAN encapsulated traffic. The baseline logical path, the direct path between the compute over the internet, used TCP 443. Accompanying digital transformation and cloud adoption was the rising trend of Software as a Service (SaaS), which delivered software over the internet on HTTPS, i.e. TCP 443. HTTPS constituted an increasing majority of enterprise network traffic and was most unlikely to be throttled or firewalled, since an enterprise network that did not allow HTTPS would be of little use.

Once the protocol and ports that the extended network would need to accelerate through its PoPs in the regions of the evaluation, and the destination IP address in Hong Kong, were decided, the extended network provider was notified for configuration and subsequently provided the IP addresses of the PoPs at which the service was provisioned for the evaluation. A slight problem with the provisioning at the Mumbai PoP meant that another Mumbai PoP IP address eventually had to be used instead. Details are documented in the appendix.

Cron was the job scheduler for the evaluation, whereas the tried and trusted ping and the industry-standard iPerf were employed to evaluate the network performance of the extended network compared with the direct path between the compute over the internet. Ping was set to probe every 100 milliseconds (ms), i.e. 10 times a second, throughout the 7-day evaluation to measure the round-trip time (RTT), the jitter, which was the fluctuation in the RTT, and the packet loss. Standard output from ping is designed for readability rather than processability, so a Bash script was coded to filter it into Comma-Separated Values (CSV), which are more computable than unstructured text. A second Bash script was coded to execute the ping command, wrapped by the output-filtering script, if it was found not to be running. It was scheduled every minute so that ping or the wrapper script would be started again even if they were, for reasons unknown, terminated prematurely, ensuring a round-the-clock, round-the-week measurement with no manual intervention. Two ping sessions were recorded, one for each of the two logical paths.
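As an illustration of how such a CSV might be reduced to headline figures, the sketch below averages the RTT column and estimates jitter. It assumes the four-column layout `date,epoch,address,rtt` written by the filter script, and it assumes jitter is taken as the mean absolute difference between consecutive RTT samples, which is only one of several common definitions and not necessarily the one behind the reported results. The function name `summarise_ping` is hypothetical. Packet loss is not attempted, because the CSV keeps only received replies and no sequence numbers.

```bash
#!/bin/sh
# summarise_ping [FILE...]: prints "samples,mean_rtt_ms,jitter_ms".
# Reads the named CSV files, or standard input when none are given.
# Assumption: column 4 is the RTT in ms; jitter is the mean absolute
# difference between consecutive samples.
summarise_ping() {
    awk -F, '
        NF >= 4 {
            rtt = $4 + 0
            if (n > 0) { d = rtt - prev; if (d < 0) d = -d; jitter_sum += d }
            sum += rtt; prev = rtt; n++
        }
        END {
            if (n == 0) { print "0,NA,NA"; exit }
            jitter = (n > 1) ? jitter_sum / (n - 1) : 0
            printf "%d,%.2f,%.2f\n", n, sum / n, jitter
        }' "$@"
}

# Three made-up samples with RTTs of 10, 12 and 11 ms.
printf '%s\n' \
    '20201207000000,1607270400,10.1.1.1,10.0' \
    '20201207000000,1607270400,10.1.1.1,12.0' \
    '20201207000000,1607270400,10.1.1.1,11.0' | summarise_ping
```

Run against one file per logical path, for example `summarise_ping /root/ping-mb-10.1.1.1.csv`, this would give one comparable line for the tunnelled path and one for the direct path.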

A second set of cron jobs was scheduled to execute a 2-minute TCP iPerf session 4 times an hour for each of the two logical paths, each at a different time, to measure the throughput between the compute and to load the two paths with traffic so that they mirrored more closely a production environment. The original plan was a round-the-clock TCP iPerf session that reported a bandwidth measure every minute. Such intensive probing, however, would have violated the AWS Network Stress Test policy without prior approval from the AWS Simulated Events Team. Moreover, AWS EC2 charges for outbound data transfer, which for a 7-day round-the-clock evaluation would have made the whole enterprise a costly exercise. The iPerf sessions were therefore reduced to 2 minutes each, 4 times an hour. They were instructed to produce output in CSV, so there was no need for a Bash script to post-process the output before recording.

A third set of cron jobs was scheduled to start the two aforementioned sets of cron jobs on the date the evaluation began and to stop them at the end of the evaluation.
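The iPerf CSV can be reduced in much the same way. The sketch below assumes the iPerf 2 CSV report layout, in which each row ends with the bytes transferred and the bits per second, making bits per second the ninth field. That layout should be verified against the installed iPerf version before relying on it. The function name `mean_mbps` and the sample rows are hypothetical.

```bash
#!/bin/sh
# mean_mbps [FILE...]: average throughput in Mbit/s across iPerf CSV rows.
# Assumption: field 9 of each row is the measured bits per second.
mean_mbps() {
    awk -F, '
        NF >= 9 { sum += $9; n++ }
        END { if (n) printf "%.2f\n", sum / n / 1000000; else print "NA" }' "$@"
}

# Two made-up sessions measuring 50 Mbit/s and 70 Mbit/s.
printf '%s\n' \
    '20201207000100,10.1.1.2,50000,10.1.1.1,443,3,0.0-60.0,375000000,50000000' \
    '20201207001600,10.1.1.2,50002,10.1.1.1,443,3,0.0-60.0,525000000,70000000' | mean_mbps
```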

| Evaluation Date | Dec 10th to 17th | Dec 7th to 14th | Dec 7th to 14th | Dec 7th to 14th |
|---|---|---|---|---|
| Region | Vietnam | Cambodia | India | Hong Kong |
| Extended Network PoP | Ho Chi Minh City | Singapore | Mumbai | Hong Kong |
| Compute Resources Provider | VinaHost Vietnam in VDC2 NTMK | AWS Singapore | AWS India | AWS Hong Kong |
| Compute Specifications | 1x vCPU, 512MB RAM, 12GB SSD | 1x vCPU, 1GB RAM, 8GB SSD | 1x vCPU, 1GB RAM, 8GB SSD | 1x vCPU, 1GB RAM, 8GB SSD |
| Internet Resources Provider | VNPT | AWS Singapore | AWS India | AWS Hong Kong |
| Internet Specifications | 1x IPv4 IP Address, 100Mbps Bandwidth | 1x IPv4 IP Address, At least 100Mbps Bandwidth | 1x IPv4 IP Address, At least 100Mbps Bandwidth | 1x IPv4 IP Address, At least 100Mbps Bandwidth |
| Internet IP Address | 123.30.136.206 | 13.251.37.98 | 3.6.244.29 | 18.163.161.217 |
| Operating System | Ubuntu (all regions) | | | |
| Logical Network Paths | End-to-End WireGuard Tunnel on UDP 2426; Direct Internet on TCP 443 (all regions) | | | |
| Evaluation Tools | Cron for job scheduling; Bash to filter the standard Ping output to CSV; Ping for measuring the RTT, jitter and packet loss; iPerf for measuring throughput and inserting traffic into the path (all regions) | | | |

Bash and Cron

Below is the Bash script that post-processes the standard Ping output into CSV. Be sure to add execute permission to the script for otherwise it will not be allowed to run.

Pingc Bash Script

#!/bin/bash

trap echo 0

ping "$@" | while read -r line; do

[[ "$line" =~ ^PING ]] && continue

[[ ! "$line" =~ "bytes from" ]] && continue

dt=$(date +'%Y%m%d%H%M%S')

et=$(date +%s)

addr=${line##*bytes from }

addr=${addr%%:*}

rtt=${line##*time=}

rtt=${rtt%% *}

echo -n "$dt,$et,$addr,$rtt"

echo

done

Adding execute permission to the Pingc bash script.

$ chmod +x /app/pingc/pingc.sh
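To see what the parameter expansions in the Pingc script do, the following sketch applies the same four expansions to a single sample line. The function name `parse_ping_line` and the sample reply are illustrative only. The reply format is the usual Linux ping format, which the script's patterns (`bytes from` and `time=`) rely on.

```bash
#!/bin/sh
# parse_ping_line LINE: prints "address,rtt" using the same expansions as pingc.
parse_ping_line() {
    line=$1
    addr=${line##*bytes from }   # drop everything up to and including "bytes from "
    addr=${addr%%:*}             # drop everything from the first ":" onwards
    rtt=${line##*time=}          # drop everything up to and including "time="
    rtt=${rtt%% *}               # drop the unit that follows the first space
    echo "$addr,$rtt"
}

parse_ping_line "64 bytes from 10.1.1.1: icmp_seq=1 ttl=64 time=32.1 ms"
```

The script then prefixes each such pair with a formatted date and an epoch timestamp to form one CSV row.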

Below is the Bash script that checks if a command is in process and will execute it if it is not. Be sure to add execute permission to the script for otherwise it will not be allowed to run.

Daemonc Bash Script

#!/bin/bash

# Run the command only if no process with the same command line is already running.

if ! ps aux | grep -v grep | grep -v "$0" | grep -qF -- "$*"; then

"$@"

fi

exit

Adding execute permission to the Daemonc bash script.

$ chmod +x /app/daemonc/daemonc.sh

Below are the cron jobs that execute the Bash scripts and orchestrate the entire performance.

Crontab (AWS Hong Kong)

* * * * * /app/daemonc/daemonc.sh iperf -s -D -p 443

Crontab (AWS Mumbai)

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 10.1.1.1 >> /root/ping-mb-10.1.1.1.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 45.43.45.141 >> /root/ping-mb-45.43.45.141.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 18.163.161.217 >> /root/ping-mb-18.163.161.217.csv

# 0,15,30,45 * * * * iperf -c 10.1.1.1 -p 443 -y C -t60 >> /root/iperf-mb-10.1.1.1.csv

# 2,17,32,47 * * * * iperf -c 18.163.161.217 -p 443 -y C -t60 >> /root/iperf-mb-18.163.161.217.csv

0 0 7 12 * crontab -l | perl -nle 's/^#\s*([0-9*])/$1/;print' | crontab

# 0 0 14 12 * pkill -f pingc

# 0 0 14 12 * crontab -l | perl -nle 's/^([^#])/# $1/;print' | crontab

Crontab (AWS Singapore)

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 10.1.1.1 >> /root/ping-sg-10.1.1.1.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 129.227.67.94 >> /root/ping-sg-129.227.67.94.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 18.163.161.217 >> /root/ping-sg-18.163.161.217.csv

# 5,20,35,50 * * * * iperf -c 10.1.1.1 -p 443 -y C -t60 >> /root/iperf-sg-10.1.1.1.csv

# 7,22,37,52 * * * * iperf -c 18.163.161.217 -p 443 -y C -t60 >> /root/iperf-sg-18.163.161.217.csv

0 0 7 12 * crontab -l | perl -nle 's/^#\s*([0-9*])/$1/;print' | crontab

# 0 0 14 12 * pkill -f pingc

# 0 0 14 12 * crontab -l | perl -nle 's/^([^#])/# $1/;print' | crontab

Crontab (VinaHost.vn Ho Chi Minh City)

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 10.1.1.1 >> /root/ping-hc-10.1.1.1.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 122.10.140.2 >> /root/ping-hc-122.10.140.2.csv

# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 18.163.161.217 >> /root/ping-hc-18.163.161.217.csv

# 10,25,40,55 * * * * iperf -c 10.1.1.1 -p 443 -y C -t60 >> /root/iperf-hc-10.1.1.1.csv

# 12,27,42,57 * * * * iperf -c 18.163.161.217 -p 443 -y C -t60 >> /root/iperf-hc-18.163.161.217.csv

0 0 10 12 * crontab -l | perl -nle 's/^#\s*([0-9*])/$1/;print' | crontab

# 0 0 17 12 * pkill -f pingc

# 0 0 17 12 * crontab -l | perl -nle 's/^([^#])/# $1/;print' | crontab
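The start and stop entries in these crontabs work by rewriting the crontab itself: the start entry strips the leading `#` from commented-out jobs, and the stop entry comments every active line out again. The sketch below shows the same two substitutions on sample text, using `sed -E` in place of the Perl one-liners purely so that the effect can be tried without touching a real crontab. The function names are hypothetical.

```bash
#!/bin/sh
# enable_jobs: uncomment lines that look like disabled cron entries,
# i.e. "#", optional whitespace, then a digit or "*".
enable_jobs() {
    sed -E 's/^#[[:space:]]*([0-9*])/\1/'
}

# disable_jobs: comment out every line that is not already a comment.
disable_jobs() {
    sed -E 's/^([^#])/# \1/'
}

# Sample crontab text; a free-text comment is left alone by enable_jobs.
printf '%s\n' \
    '# measurement jobs' \
    '# * * * * * /app/daemonc/daemonc.sh /app/pingc/pingc.sh -i0.1 10.1.1.1' \
    '# 0 0 14 12 * pkill -f pingc' | enable_jobs
```

Because only lines whose first character after the `#` is a digit or `*` are uncommented, ordinary comments survive the start step untouched.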

System Configurations

The Ubuntu operating systems on all the compute needed to be properly configured, with all the required packages installed, before the evaluation could proceed. Because Cron was the orchestrator that started and ended the evaluation by enabling and disabling, at specific times, the sets of cron jobs that in turn managed Ping and iPerf, the first priority was to ensure that the system clocks on all the compute were aligned to the same time zone. The time zone of choice was of course Hong Kong time (UTC+8), and this was done simply with the following command over Secure Shell (SSH, RFC 4251).

Aligning Time Zones

$ timedatectl set-timezone Asia/Hong_Kong

All the required system and security updates were to be installed before installing other packages required for the evaluation.

System Upgrade

$ apt update && apt upgrade

For the evaluation, as previously discussed, WireGuard, iPerf and other miscellaneous tools, e.g. ifconfig, would be required. Bash, ping and cron, on the other hand, were installed by default and required no manual installation.

Installing WireGuard and Tools

$ apt install wireguard iperf net-tools

AWS places a basic firewall, the security group, in front of every EC2 instance, which if forgotten would do more harm than help. For this evaluation both UDP 2426 and TCP 443 needed to be allowed on all of the compute.

WireGuard Configurations

With the system set, the tools installed and the firewall configured, the first task was to configure WireGuard and establish an encrypted tunnel between the compute in the regions of evaluation, as WireGuard clients, and the compute in Hong Kong, as the WireGuard server, in a hub-and-spoke topology. A pair of private and public keys needed to be generated for each of the WireGuard instances.

WireGuard Private and Public Keys

$ cd /etc/wireguard && umask 077; wg genkey | tee privatekey | wg pubkey > publickey

$ cat /etc/wireguard/privatekey /etc/wireguard/publickey

The private keys were of course private whereas the public keys were needed for the WireGuard instances on the other side of the tunnel to verify that the instance on this side was the intended instance for the tunnelling. The public keys along with the IP address assignment and the routes that were to be propagated to the other WireGuard instances in the private network needed to be edited into the WireGuard configuration. This could be done by the tried and true Nano editor.

Editing WireGuard Configurations

$ nano wg0.conf

The WireGuard configurations for each of the WireGuard instances are listed below.

WireGuard AWS Hong Kong

[Interface]

PrivateKey = (redacted)

Address = 10.1.1.1/24

ListenPort = 2426

[Peer]

PublicKey = (redacted)

AllowedIPs = 10.1.1.2/32

[Peer]

PublicKey = (redacted)

AllowedIPs = 10.1.1.3/32

[Peer]

PublicKey = (redacted)

AllowedIPs = 10.1.1.4/32

WireGuard AWS Mumbai

[Interface]

PrivateKey = (redacted)

Address = 10.1.1.2/24

ListenPort = 2426

[Peer]

PublicKey = (redacted)

Endpoint = 45.43.45.141:2426

AllowedIPs = 10.1.1.1/24

WireGuard AWS Singapore

[Interface]

PrivateKey = (redacted)

Address = 10.1.1.3/24

ListenPort = 2426

[Peer]

PublicKey = (redacted)

Endpoint = 129.227.67.94:2426

AllowedIPs = 10.1.1.1/24

WireGuard VinaHost.vn Ho Chi Minh City

[Interface]

PrivateKey = (redacted)

Address = 10.1.1.4/24

ListenPort = 2426

[Peer]

PublicKey = (redacted)

Endpoint = 122.10.140.2:2426

AllowedIPs = 10.1.1.1/24
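To illustrate how the `Address` and `AllowedIPs` values above relate to one another, the sketch below uses Python's standard `ipaddress` module. It is an illustration only, not part of the evaluation tooling: it shows that the `10.1.1.1/24` entry on a spoke spans the whole tunnel subnet, whereas the hub admits each spoke as a single `/32` host.

```python
import ipaddress

# Tunnel addresses taken from the configurations above.
hub = ipaddress.ip_interface('10.1.1.1/24')
spokes = {
    'Mumbai': ipaddress.ip_interface('10.1.1.2/24'),
    'Singapore': ipaddress.ip_interface('10.1.1.3/24'),
    'Ho Chi Minh City': ipaddress.ip_interface('10.1.1.4/24'),
}

# On a spoke, AllowedIPs = 10.1.1.1/24 covers the network 10.1.1.0/24.
spoke_allowed = ipaddress.ip_interface('10.1.1.1/24').network
print(spoke_allowed)

for name, iface in spokes.items():
    # On the hub, each spoke is admitted as a single /32 host.
    hub_allowed = ipaddress.ip_network(f'{iface.ip}/32')
    print(name, hub_allowed, iface.ip in spoke_allowed)
```

Because every spoke address falls inside the spoke-side range, traffic between spokes is routed into the tunnel towards the hub, which is consistent with the hub-and-spoke topology.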

Once the configurations were set for each of the WireGuard instances, WireGuard could be brought up with a single command.

Enabling WireGuard

$ wg-quick up wg0

The WireGuard tunnels would be established within seconds, and information about the tunnels could be retrieved with another command.

Command for Information about the WireGuard Tunnel

$ wg

Output similar to the below would then be printed.

Information about the WireGuard Tunnel

interface: wg0

public key: (redacted)

private key: (redacted)

listening port: 2426

peer: PRz9kXv9hbUcqb+4ZQJETZWxKg0rTTQ1E6RdV/ut2Wc=

endpoint: 129.227.135.102:42281

allowed ips: 10.1.1.4/32

latest handshake: 1 minute, 34 seconds ago

transfer: 75.92 GiB received, 2.81 GiB sent

peer: uULItBk3pdUortpIOAIxJe5ojD8l4nQuDC7vKzrhYD0=

endpoint: 129.227.135.102:40741

allowed ips: 10.1.1.2/32

latest handshake: 18 hours, 35 minutes, 11 seconds ago

transfer: 384.25 GiB received, 2.71 GiB sent

peer: ECyKD70Day4Bx1wEnHLbfEKUslVLJSFNM/BXTGRrxyI=

endpoint: 129.227.135.102:44055

allowed ips: 10.1.1.3/32

latest handshake: 18 hours, 35 minutes, 51 seconds ago

transfer: 437.66 GiB received, 3.02 GiB sent
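Output of this shape is easy to check by script, for example to spot a peer whose last handshake is unexpectedly old. The following is a minimal sketch, assuming the `key: value` layout shown above; the function names are hypothetical and only the time units seen in this output (seconds, minutes, hours, plus days) are handled.

```python
import re

# Seconds per unit, as the units appear in the "latest handshake" line.
UNIT_SECONDS = {'second': 1, 'minute': 60, 'hour': 3600, 'day': 86400}


def parse_wg(text):
    """Group the 'key: value' lines of `wg` output into one dict per peer."""
    peers = []
    for line in text.splitlines():
        key, _, value = line.strip().partition(': ')
        if key == 'peer':
            peers.append({'peer': value})
        elif peers and value:
            # Lines before the first peer (the interface section) are skipped.
            peers[-1][key] = value
    return peers


def handshake_age_seconds(text):
    """Convert e.g. '1 minute, 34 seconds ago' into a number of seconds."""
    pairs = re.findall(r'(\d+) (second|minute|hour|day)s?', text)
    return sum(int(value) * UNIT_SECONDS[unit] for value, unit in pairs)


sample = """\
interface: wg0
  listening port: 2426

peer: PRz9kXv9hbUcqb+4ZQJETZWxKg0rTTQ1E6RdV/ut2Wc=
  endpoint: 129.227.135.102:42281
  allowed ips: 10.1.1.4/32
  latest handshake: 1 minute, 34 seconds ago
  transfer: 75.92 GiB received, 2.81 GiB sent

peer: uULItBk3pdUortpIOAIxJe5ojD8l4nQuDC7vKzrhYD0=
  endpoint: 129.227.135.102:40741
  allowed ips: 10.1.1.2/32
  latest handshake: 18 hours, 35 minutes, 11 seconds ago
  transfer: 384.25 GiB received, 2.71 GiB sent
"""

for peer in parse_wg(sample):
    print(peer['allowed ips'], handshake_age_seconds(peer['latest handshake']))
```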

With the extended network PoP provisioned, the systems in the regions of evaluation set up and the WireGuard tunnels established between all of the instances, the evaluation could begin.

Result

Traceroute

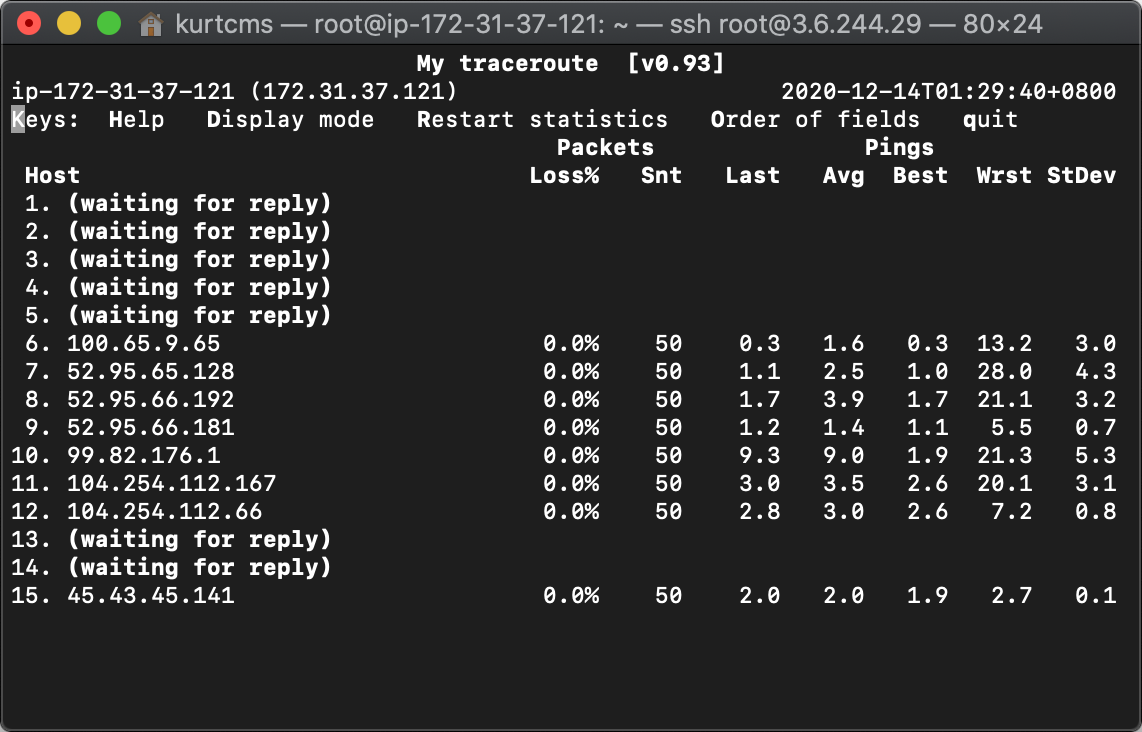

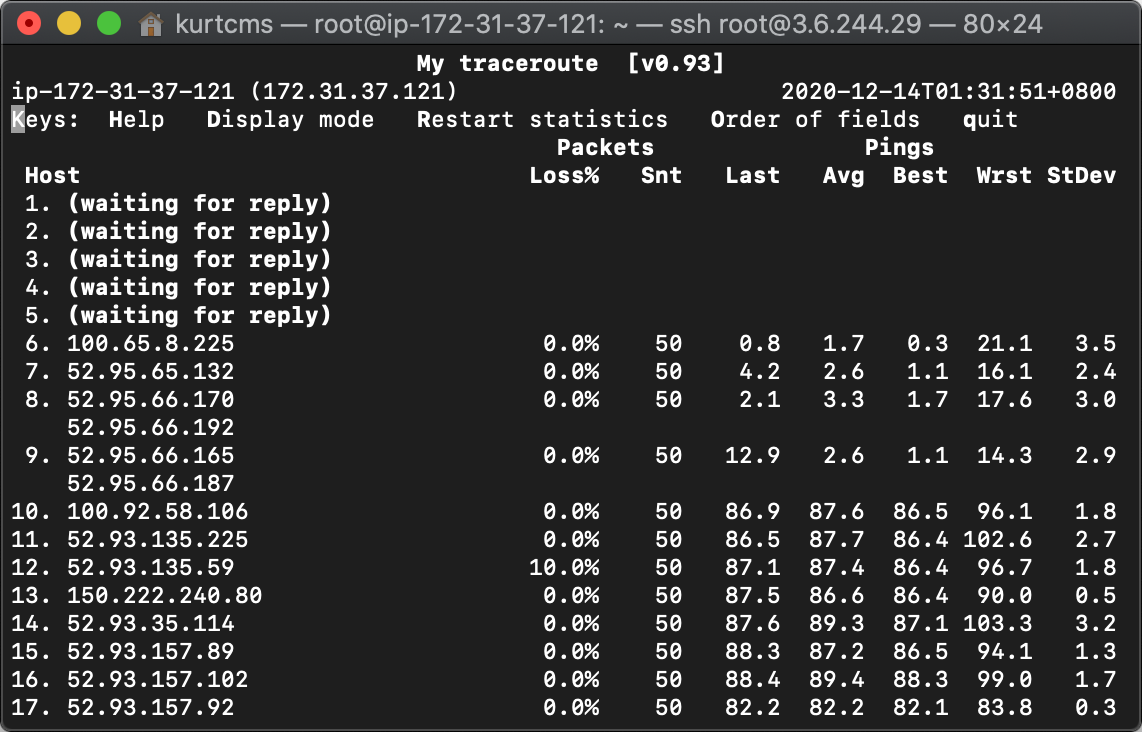

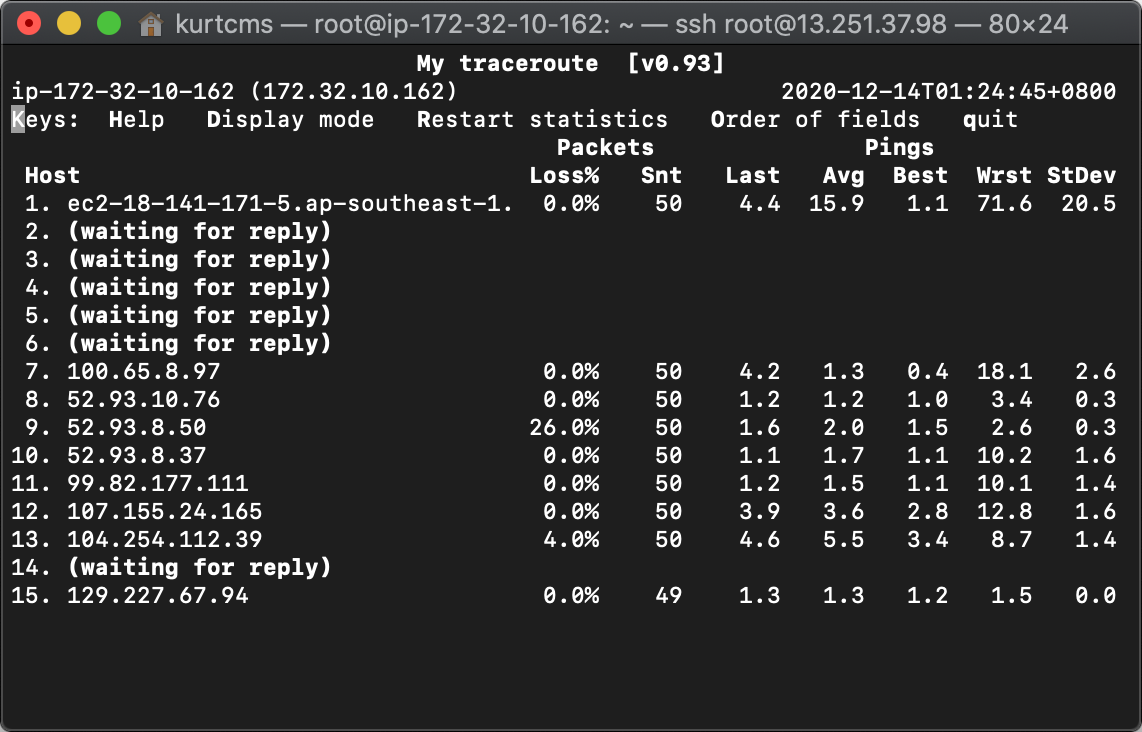

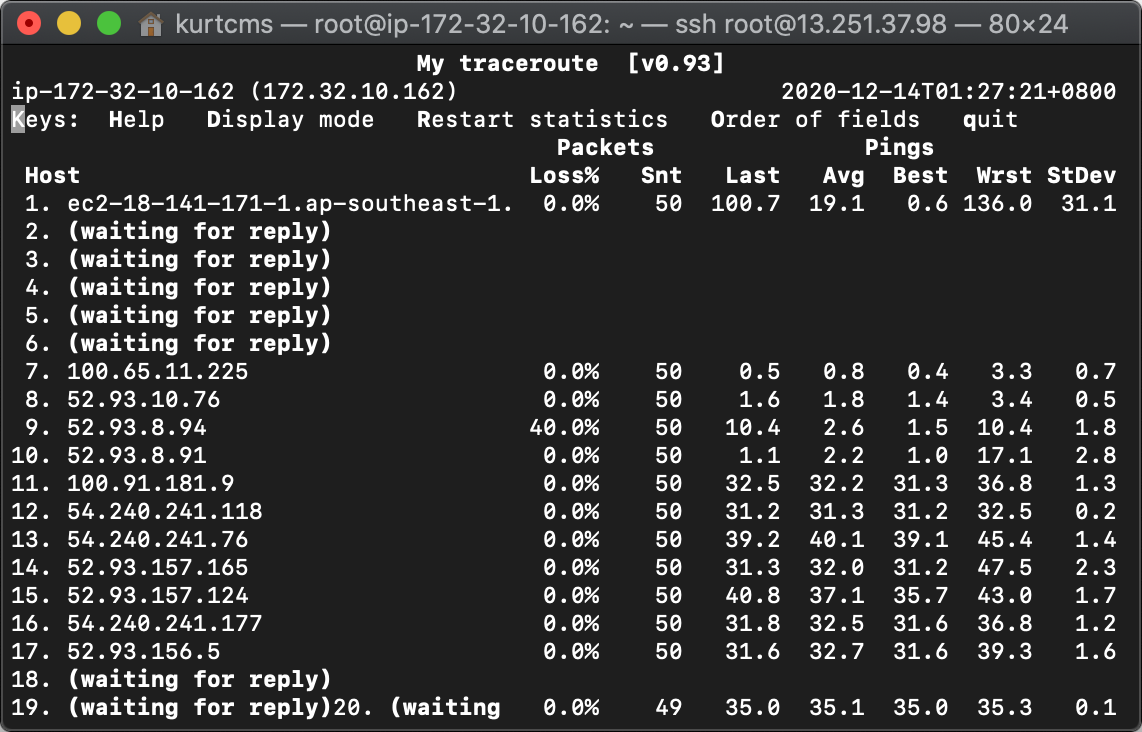

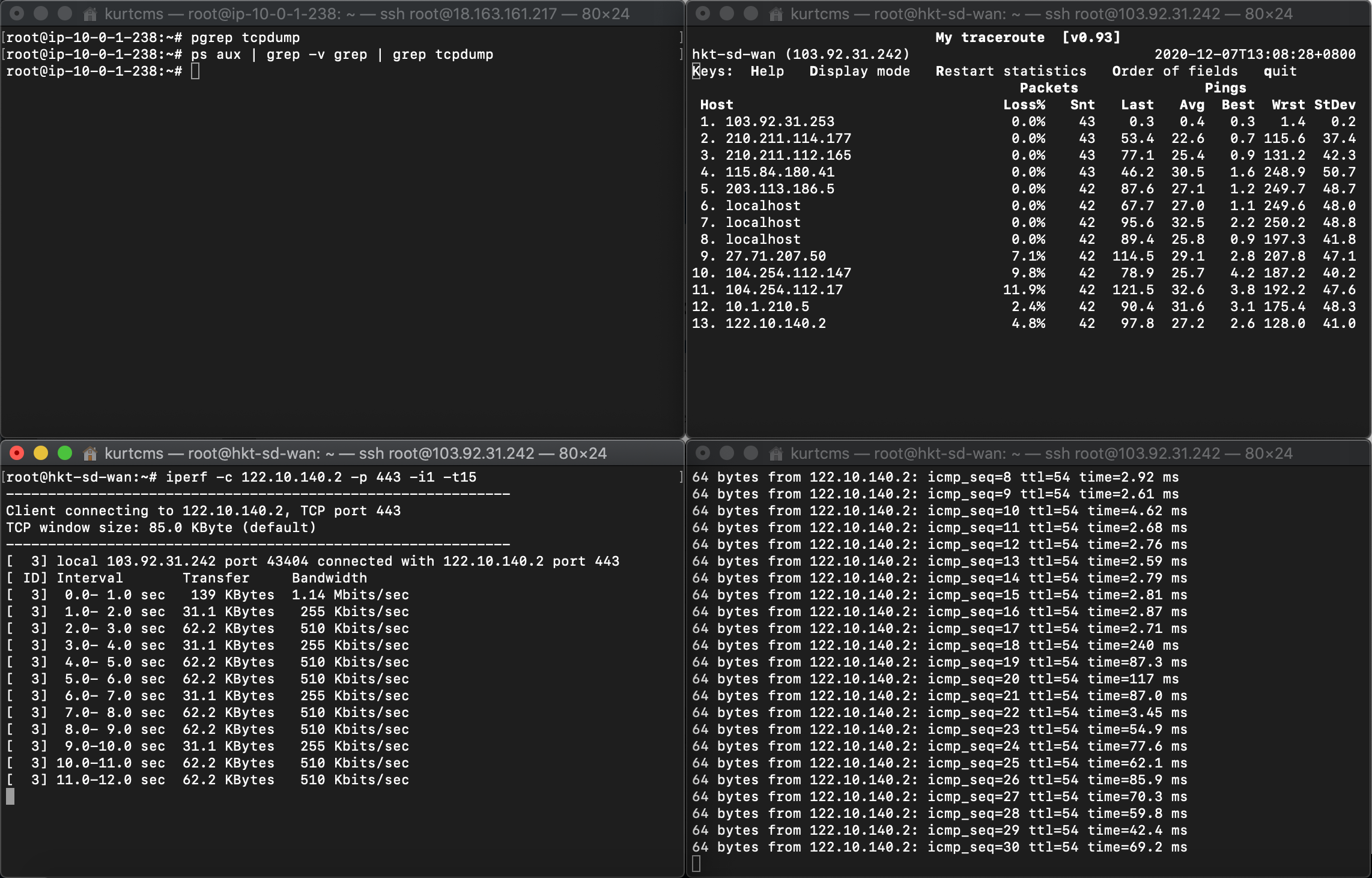

Results from MTR showing the number of hops in the path, and the IP address of each hop, between the compute in the regions of evaluation and the respective Zenlayer PoP, and between the compute and the destination IP address in AWS Hong Kong, are listed below for reference.

- From VinaHost.vn HCMC to Extended Network HCMC PoP; from VinaHost.vn HCMC to AWS Hong Kong

- From AWS Mumbai to Extended Network Mumbai PoP; from AWS Mumbai to AWS Hong Kong

- From AWS Singapore to Extended Network Singapore PoP; from AWS Singapore to AWS Hong Kong

Analysis

Ping was set to probe every 100 milliseconds (ms), i.e. 10 times a second, to measure the RTT, the jitter (the fluctuation in the RTT) and the percentage of packets received or lost throughout the 7-day evaluation. That amounted to 6,048,000 Ping data points per compute (10 times a second x 60 seconds a minute x 60 minutes an hour x 24 hours a day x 7 days a week), together with 672 iPerf measurements per compute (4 times an hour x 24 hours a day x 7 days a week), to be analysed. Data on this scale required a more efficient, more customisable and more purpose-built tool for analysis.
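The arithmetic can be reproduced in a few lines of Python. The constant names below are illustrative; the hourly, daily and weekly totals are the same figures the analysis script uses as the number of Ping probes expected per period.

```python
PINGS_PER_SECOND = 10      # one probe every 100 ms
IPERF_RUNS_PER_HOUR = 4
DAYS = 7

pings_per_hour = PINGS_PER_SECOND * 60 * 60
pings_per_day = pings_per_hour * 24
pings_per_week = pings_per_day * DAYS
iperf_per_week = IPERF_RUNS_PER_HOUR * 24 * DAYS

print(pings_per_hour, pings_per_day, pings_per_week, iperf_per_week)
# 36000 864000 6048000 672
```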

Python, with its runtime efficiency, its intuitive syntax and, most importantly, its wealth of readily available data science libraries such as NumPy, Pandas and Matplotlib, which are designed to process volumes of data that conventional spreadsheet applications cannot handle, was the tool of choice to process the 18 million plus data points collected in this evaluation.

A Python script importing NumPy and Pandas was written to read the Ping data points into DataFrames and to process them to return the hourly, daily and overall mean, median, standard deviation, minimum and maximum of the RTT as measures of latency and jitter, the share of packets received as a measure of packet loss, and an overall picture of the data distribution. The iPerf results were also processed to return the hourly, daily and overall average throughput in megabits per second (Mb/s).
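To make the measures concrete, the sketch below computes the same statistics for a handful of RTT samples using only the Python standard library rather than Pandas. The sample values and the function name `summarise` are invented for illustration. `statistics.stdev` is the sample standard deviation, which is also what Pandas' `Series.std()` returns by default.

```python
import statistics


def summarise(rtts, expected):
    """Summarise RTT samples (ms) for a period in which `expected` probes were sent."""
    return {
        'RTT Mean': statistics.mean(rtts),         # latency
        'RTT Median': statistics.median(rtts),     # skewness check against the mean
        'RTT Std Dev': statistics.stdev(rtts),     # jitter
        'RTT Min': min(rtts),
        'RTT Max': max(rtts),
        'Pkt Received': len(rtts) / expected,      # 1 minus the packet loss
    }


# Invented samples: 8 replies received out of 10 probes sent.
sample = [30.0, 31.0, 32.0, 33.0, 30.0, 31.0, 32.0, 33.0]
for name, value in summarise(sample, expected=10).items():
    print(f'{name}: {value:.4f}')
```

A lost probe simply leaves no RTT row behind, which is why the count of rows divided by the number of probes expected gives the share of packets received.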

| Network Measure | RTT Mean | RTT Median | RTT Standard Deviation | RTT Minimum | RTT Maximum | iPerf Mean |
|---|---|---|---|---|---|---|
| Network Performance | Latency (ms) | Skewness (with respect to mean) | Jitter (ms) | Minimum Latency (ms) | Maximum Latency (ms) | Throughput (Mb/s) |

Matplotlib was imported to chart the network performance during the evaluation period in four charts. The first plots the hourly average RTT, with one standard deviation either side of it as the lower and upper band to illustrate the range of the jitter; the band is bounded by the observed minimum and maximum to keep it within the range of observation. The second plots the average RTT from the compute in the regions of evaluation to the respective extended network PoP. The third plots the percentage of packets received (a measure of packet loss), obtained by dividing the hourly Ping data points received by the hourly Ping data points sent. The fourth plots the throughput in Mb/s, likewise banded by the standard deviation and bounded by the observed minimum and maximum.
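The bounding of the band can be stated in a couple of lines. The sketch below is a scalar illustration of that rule only; the function name `jitter_band` and the minimum and maximum values in the example are invented, while the mean and standard deviation are the overall Ho Chi Minh City figures reported in this evaluation.

```python
def jitter_band(mean, std, lo, hi):
    """Return (lower, upper): mean -/+ one std, clipped to the observed [lo, hi]."""
    lower = max(mean - std, lo)   # never drawn below the observed minimum
    upper = min(mean + std, hi)   # never drawn above the observed maximum
    return lower, upper


# Mean 32.10 ms and jitter 1.66 ms, with an invented minimum of 31.00 ms
# and an invented maximum of 250.00 ms.
lower, upper = jitter_band(32.10, 1.66, 31.00, 250.00)
print(f'{lower:.2f} {upper:.2f}')
```

Here the lower edge is clipped to the observed minimum because the RTT distribution is skewed to the right, which is typical of latency data.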

Below is the Python script. Be sure to add execute permission to the script, for otherwise it cannot be run directly.

#!/usr/bin/env python3
import sys
import csv
import datetime

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt


class city:
    '''
    Set the column headers for the ping and iPerf data,
    the date and time for the start and end of the evaluation,
    and a working directory that will be used throughout the class.
    '''
    pcol = ['Date', 'Epoch', 'Dst IP', 'RTT']
    icol = ['Date', 'Src IP', 'Src Port', 'Dst IP', 'Dst Port', 'Int', 'Bytes', 'Bits/s']
    ds = datetime.datetime.strptime('2020-12-07 00:00:00', '%Y-%m-%d %H:%M:%S')
    de = datetime.datetime.strptime('2020-12-14 00:00:00', '%Y-%m-%d %H:%M:%S')
    path = sys.path[0] + '/'

    def __init__(self, p_wg, p_zl, p_hk, i_wg, i_hk):
        '''
        Read the ping and iPerf data given their filenames
        '''
        # The squeeze=False argument has been dropped: it was the default,
        # and the keyword was removed from read_csv in pandas 2.0.
        self.p_wg = pd.read_csv(self.path + p_wg + '.csv',
                                names=self.pcol, header=None, index_col=0,
                                parse_dates=True)
        self.p_zl = pd.read_csv(self.path + p_zl + '.csv',
                                names=self.pcol, header=None, index_col=0,
                                parse_dates=True)
        self.p_hk = pd.read_csv(self.path + p_hk + '.csv',
                                names=self.pcol, header=None, index_col=0,
                                parse_dates=True)
        self.i_wg = pd.read_csv(self.path + i_wg + '.csv',
                                names=self.icol, header=None, index_col=0,
                                parse_dates=True)
        self.i_hk = pd.read_csv(self.path + i_hk + '.csv',
                                names=self.icol, header=None, index_col=0,
                                parse_dates=True)

    def __crunch(self, d, d_int, n):
        '''
        Compute and return the mean, median, standard deviation,
        min and max values given the duration and the data type.
        '''
        c = []
        b = 1000000  # bits per megabit
        for i in range(n):
            if 0 < d_int < 8:
                # Hourly: hour i of day d_int (1 to 7)
                ts = (self.ds + datetime.timedelta(
                    hours=i, days=d_int - 1)).strftime('%Y-%m-%d %H:%M:%S')
                te = (self.ds + datetime.timedelta(
                    hours=i + 1, days=d_int - 1)).strftime('%Y-%m-%d %H:%M:%S')
                pktb = 36000  # pings expected in an hour
            elif d_int == 0:
                # Daily
                ts = (self.ds + datetime.timedelta(
                    days=i)).strftime('%Y-%m-%d %H:%M:%S')
                te = (self.ds + datetime.timedelta(
                    days=i + 1)).strftime('%Y-%m-%d %H:%M:%S')
                pktb = 864000  # pings expected in a day
            elif d_int == 8:
                # Weekly
                ts = self.ds.strftime('%Y-%m-%d %H:%M:%S')
                te = self.de.strftime('%Y-%m-%d %H:%M:%S')
                pktb = 6048000  # pings expected in a week
            else:
                sys.exit('The date integer provided is out of scope')
            if 'RTT' in d.columns:
                # Ping data
                w = d.loc[ts:te, 'RTT']
                c.append({
                    'Date': ts,
                    'RTT Mean': w.mean(),
                    'RTT Median': w.median(),
                    'RTT Std Dev': w.std(),
                    'RTT Min': w.min(),
                    'RTT Max': w.max(),
                    'Pkt Received': w.count() / pktb
                })
            elif 'Bits/s' in d.columns:
                # iPerf data, converted from bits/s to Mbits/s
                w = d.loc[ts:te, 'Bits/s']
                c.append({
                    'Date': ts,
                    'Bits/s Mean': w.mean() / b,
                    'Bits/s Median': w.median() / b,
                    'Bits/s Std Dev': w.std() / b,
                    'Bits/s Min': w.min() / b,
                    'Bits/s Max': w.max() / b,
                })
            else:
                sys.exit('The dataset provided is out of scope')
        c = pd.DataFrame(c)
        c.set_index('Date', inplace=True)
        return c

    def daily(self, d):
        '''
        Return the daily RTT and throughput
        '''
        # 7 days, so that the whole 7-day evaluation is covered
        # (the original passed 6 and left out the last day).
        return self.__crunch(d, 0, 7)

    def hourly(self, d):
        '''
        Return the hourly RTT and throughput
        '''
        # DataFrame.append was removed in pandas 2.0, so the per-day
        # frames are concatenated instead. Days run from 1 to 7 inclusive
        # (the original loop stopped after day 6).
        return pd.concat([self.__crunch(d, p, 24) for p in range(1, 8)])

    def weekly(self, d):
        '''
        Return the weekly RTT and throughput
        '''
        return self.__crunch(d, 8, 1)

    def hourlyd(self, d, d_int):
        '''
        Return the hourly RTT and throughput of a given date
        '''
        if d_int > 0:
            return self.__crunch(d, d_int, 24)
        else:
            sys.exit('The date integer provided is out of scope')

    def ex(self, p1, p2, p3, i1, i2, tl, l1, l2, l3, y1, y2, y3, x3, plot):
        '''
        Export the given RTT and throughput results and
        chart them in four subplots
        '''
        p1.to_csv(self.path + tl + ' - ' + y1 + ' - ' + l1 + '.csv',
                  index=False, float_format='%.4f')
        p2.to_csv(self.path + tl + ' - ' + y1 + ' - ' + l2 + '.csv',
                  index=False, float_format='%.4f')
        p3.to_csv(self.path + tl + ' - ' + y1 + ' - ' + l3 + '.csv',
                  index=False, float_format='%.4f')
        i1.to_csv(self.path + tl + ' - ' + y3.replace('/', ' per second')
                  + ' - ' + l1 + '.csv',
                  index=False, float_format='%.4f')
        i2.to_csv(self.path + tl + ' - ' + y3.replace('/', ' per second')
                  + ' - ' + l2 + '.csv',
                  index=False, float_format='%.4f')
        if plot:
            fig, (mean, zmean, pkt, ipf) = plt.subplots(4, 1,
                                                        sharex=True,
                                                        figsize=(10, 10))
            # Subplot #1: series #1
            mean.plot(p1.index, p1.loc[:, 'RTT Mean'],
                      marker='.', alpha=0.5, linewidth=0.5, label=l1)
            mean.fill_between(
                p1.index,
                pd.concat([p1.loc[:, 'RTT Mean'] - p1.loc[:, 'RTT Std Dev'],
                           p1.loc[:, 'RTT Min']], axis=1).max(axis=1),
                pd.concat([p1.loc[:, 'RTT Mean'] + p1.loc[:, 'RTT Std Dev'],
                           p1.loc[:, 'RTT Max']], axis=1).min(axis=1),
                alpha=0.1)
            # Subplot #2: series #1
            zmean.plot(p3.index, p3.loc[:, 'RTT Mean'], color='green',
                       marker='.', alpha=0.5, linewidth=0.5, label=l3)
            # Subplot #3: series #1
            pkt.plot(p1.index, p1.loc[:, 'Pkt Received'],
                     marker='.', alpha=0.5, linewidth=0.5, label=l1)
            # Subplot #4: series #1
            ipf.plot(i1.index, i1.loc[:, 'Bits/s Mean'],
                     marker='.', alpha=0.5, linewidth=0.5, label=l1)
            ipf.fill_between(
                i1.index,
                pd.concat([i1.loc[:, 'Bits/s Mean'] - i1.loc[:, 'Bits/s Std Dev'],
                           i1.loc[:, 'Bits/s Min']], axis=1).max(axis=1),
                pd.concat([i1.loc[:, 'Bits/s Mean'] + i1.loc[:, 'Bits/s Std Dev'],
                           i1.loc[:, 'Bits/s Max']], axis=1).min(axis=1),
                alpha=0.1)
            # Subplot #1: series #2
            mean.plot(p2.index, p2.loc[:, 'RTT Mean'],
                      marker='.', alpha=0.5, linewidth=0.5, label=l2)
            mean.fill_between(
                p2.index,
                pd.concat([p2.loc[:, 'RTT Mean'] - p2.loc[:, 'RTT Std Dev'],
                           p2.loc[:, 'RTT Min']], axis=1).max(axis=1),
                pd.concat([p2.loc[:, 'RTT Mean'] + p2.loc[:, 'RTT Std Dev'],
                           p2.loc[:, 'RTT Max']], axis=1).min(axis=1),
                alpha=0.1)
            # Subplot #3: series #2
            pkt.plot(p2.index, p2.loc[:, 'Pkt Received'],
                     marker='.', alpha=0.5, linewidth=0.5, label=l2)
            # Subplot #4: series #2
            ipf.plot(i2.index, i2.loc[:, 'Bits/s Mean'],
                     marker='.', alpha=0.5, linewidth=0.5, label=l2)
            ipf.fill_between(
                i2.index,
                pd.concat([i2.loc[:, 'Bits/s Mean'] - i2.loc[:, 'Bits/s Std Dev'],
                           i2.loc[:, 'Bits/s Min']], axis=1).max(axis=1),
                pd.concat([i2.loc[:, 'Bits/s Mean'] + i2.loc[:, 'Bits/s Std Dev'],
                           i2.loc[:, 'Bits/s Max']], axis=1).min(axis=1),
                alpha=0.1)
            # Chart options
            fig.suptitle(tl)
            mean.legend()
            mean.set_ylabel(y1)
            zmean.legend()
            zmean.set_ylabel(y1)
            zmean.set_yticks(np.arange(0, 5, 1))
            pkt.legend()
            pkt.set_yticks(np.arange(0.95, 1, 0.01))
            pkt.set_ylabel(y2)
            ipf.legend()
            ipf.set_ylabel(y3)
            ipf.set_xlabel(x3)
            if len(i1.index) > 24:
                ipf.xaxis.set_major_locator(plt.MaxNLocator(24))
            plt.xticks(rotation=45, ha='right')
            plt.tight_layout()
            plt.savefig(tl + '.png')
            #plt.show()

if __name__ == '__main__':
    '''
    Create the city objects given their respective ping and iPerf
    data, compute and export the hourly and weekly RTT and throughput,
    and chart the hourly RTT and throughput.
    '''
    mb = city('ping-mb-10.1.1.1',
              'ping-mb-45.43.45.141',
              'ping-mb-18.163.161.217',
              'iperf-mb-10.1.1.1',
              'iperf-mb-18.163.161.217')
    sg = city('ping-sg-10.1.1.1',
              'ping-sg-129.227.67.94',
              'ping-sg-18.163.161.217',
              'iperf-sg-10.1.1.1',
              'iperf-sg-18.163.161.217')
    hc = city('ping-hc-10.1.1.1',
              'ping-hc-122.10.140.2',
              'ping-hc-18.163.161.217',
              'iperf-hc-10.1.1.1',
              'iperf-hc-18.163.161.217')
    '''
    Adjust the date and time for the start and end of
    the evaluation for Ho Chi Minh City
    '''
    hc.ds = datetime.datetime.strptime('2020-12-10 00:00:00', '%Y-%m-%d %H:%M:%S')
    # The end must move with the start, otherwise the weekly figures for
    # Ho Chi Minh City would stop at the class default of 2020-12-14.
    hc.de = datetime.datetime.strptime('2020-12-17 00:00:00', '%Y-%m-%d %H:%M:%S')

    mb.ex(mb.hourly(mb.p_wg),
          mb.hourly(mb.p_hk),
          mb.hourly(mb.p_zl),
          mb.hourly(mb.i_wg),
          mb.hourly(mb.i_hk),
          'Mumbai (Hourly from 2020-12-07 to 2020-12-14)',
          'Extended Network Service',
          'Internet',
          'AWS Mumbai to Extended Network Mumbai PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          True)
    sg.ex(sg.hourly(sg.p_wg),
          sg.hourly(sg.p_hk),
          sg.hourly(sg.p_zl),
          sg.hourly(sg.i_wg),
          sg.hourly(sg.i_hk),
          'Singapore (Hourly from 2020-12-07 to 2020-12-14)',
          'Extended Network Service',
          'Internet',
          'AWS Singapore to Extended Network Singapore PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          True)
    hc.ex(hc.hourly(hc.p_wg),
          hc.hourly(hc.p_hk),
          hc.hourly(hc.p_zl),
          hc.hourly(hc.i_wg),
          hc.hourly(hc.i_hk),
          'Ho Chi Minh City (Hourly from 2020-12-10 to 2020-12-17)',
          'Extended Network Service',
          'Internet',
          'VinaHost.vn Ho Chi Minh City to Extended Network Ho Chi Minh City PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          True)
    mb.ex(mb.weekly(mb.p_wg),
          mb.weekly(mb.p_hk),
          mb.weekly(mb.p_zl),
          mb.weekly(mb.i_wg),
          mb.weekly(mb.i_hk),
          'Mumbai (From 2020-12-07 to 2020-12-14)',
          'Extended Network Service',
          'Internet',
          'AWS Mumbai to Extended Network Mumbai PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          False)
    # Title corrected: the weekly Singapore export covers 2020-12-07 to 2020-12-14.
    sg.ex(sg.weekly(sg.p_wg),
          sg.weekly(sg.p_hk),
          sg.weekly(sg.p_zl),
          sg.weekly(sg.i_wg),
          sg.weekly(sg.i_hk),
          'Singapore (From 2020-12-07 to 2020-12-14)',
          'Extended Network Service',
          'Internet',
          'AWS Singapore to Extended Network Singapore PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          False)
    # Title corrected: the Ho Chi Minh City evaluation ran from 2020-12-10 to 2020-12-17.
    hc.ex(hc.weekly(hc.p_wg),
          hc.weekly(hc.p_hk),
          hc.weekly(hc.p_zl),
          hc.weekly(hc.i_wg),
          hc.weekly(hc.i_hk),
          'Ho Chi Minh City (From 2020-12-10 to 2020-12-17)',
          'Extended Network Service',
          'Internet',
          'VinaHost.vn Ho Chi Minh City to Extended Network Ho Chi Minh City PoP',
          'RTT (ms)',
          'Pkt Received (%)',
          'Throughput (Mbits/s)',
          'Date and Time',
          False)

Adding execute permission to the analysis Python script.

$ chmod +x /root/analysis.py

Finding

The network performance of the extended network for each of the regions of evaluation would, as previously discussed, be compared against that of the direct internet path. Also added to the comparison was a reference PoP-to-PoP network performance of the MPLS IPVPN in its different classes of service (CoS) in the region, for it would be the alternative, albeit a premium one, as the middle-mile backbone network should no extended network fulfil the performance requirement.

The charts, as previously discussed, plot the network performance during the evaluation period. The first plots the hourly average RTT, with one standard deviation either side of it as the lower and upper band to illustrate the range of the jitter, bounded by the observed minimum and maximum. The second plots the average RTT from the compute in the regions of evaluation to the respective extended network PoP. The third plots the percentage of packets received (a measure of packet loss), obtained by dividing the hourly Ping data points received by the hourly Ping data points sent. The fourth plots the throughput in Mb/s, likewise banded by the standard deviation and bounded by the observed minimum and maximum.

Ho Chi Minh City

With the extended network, jitter from Ho Chi Minh City to Hong Kong dropped by 67% from 5.01ms to 1.66ms, while latency increased by 22% from 26.30ms to 32.10ms over direct internet, with packet loss at 0.79% against 0.97% over direct internet.

| From Ho Chi Minh City to Hong Kong | Extended Network | Direct Internet | MPLS IPVPN PoP-to-PoP |
|---|---|---|---|
| Latency (ms) | 32.10 | 26.30 | 53 |
| Jitter (ms) | 1.66 | 5.01 | <15 (Gold) |
| Packet Received (%) | 99.21 | 99.03 | >= 99.90% (Gold), >= 99.85% (Silver+), >= 99.40% (Silver), >= 99.00% (Bronze) |

Mumbai

With the extended network, jitter from Mumbai to Hong Kong dropped by as much as 76% from 5.87ms to 1.42ms while latency increased by 14% from 82.97ms to 94.58ms over direct internet with packet loss holding steady at 0.5%.

| From Mumbai to Hong Kong | Extended Network | Direct Internet | MPLS IPVPN PoP-to-PoP |
|---|---|---|---|
| Latency (ms) | 94.58 | 82.97 | NA |
| Jitter (ms) | 1.42 | 5.87 | NA |
| Packet Received (%) | 99.48 | 99.51 | NA |
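The percentages quoted for Mumbai follow directly from the table. The short sketch below reproduces them; the helper name `pct_change` is illustrative, and a negative result denotes a drop.

```python
def pct_change(before, after):
    """Percentage change from before to after (negative means a drop)."""
    return (after - before) / before * 100


# Direct internet -> extended network, Mumbai to Hong Kong.
jitter = pct_change(5.87, 1.42)
latency = pct_change(82.97, 94.58)
# Packet loss is the complement of the packets received.
loss_extended = 100 - 99.48
loss_direct = 100 - 99.51

print(f'jitter {jitter:.1f}%, latency {latency:+.1f}%')
print(f'loss {loss_extended:.2f}% vs {loss_direct:.2f}%')
```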

Singapore

With the extended network, jitter from Singapore to Hong Kong dropped by as much as 53% from 2.53ms to 1.19ms compared with direct internet, while latency and packet loss held steady at around 35ms and 0.5-0.8% respectively.

| From Singapore to Hong Kong | Extended Network | Direct Internet | MPLS IPVPN PoP-to-PoP |
|---|---|---|---|
| Latency (ms) | 34.73 | 36.62 | 42 |
| Jitter (ms) | 1.19 | 2.53 | <15 (Gold) |
| Packet Received (%) | 99.21 | 99.46 | >= 99.90% (Gold), >= 99.85% (Silver+), >= 99.40% (Silver), >= 99.00% (Bronze) |

Appendix

Extended Network Mumbai PoP

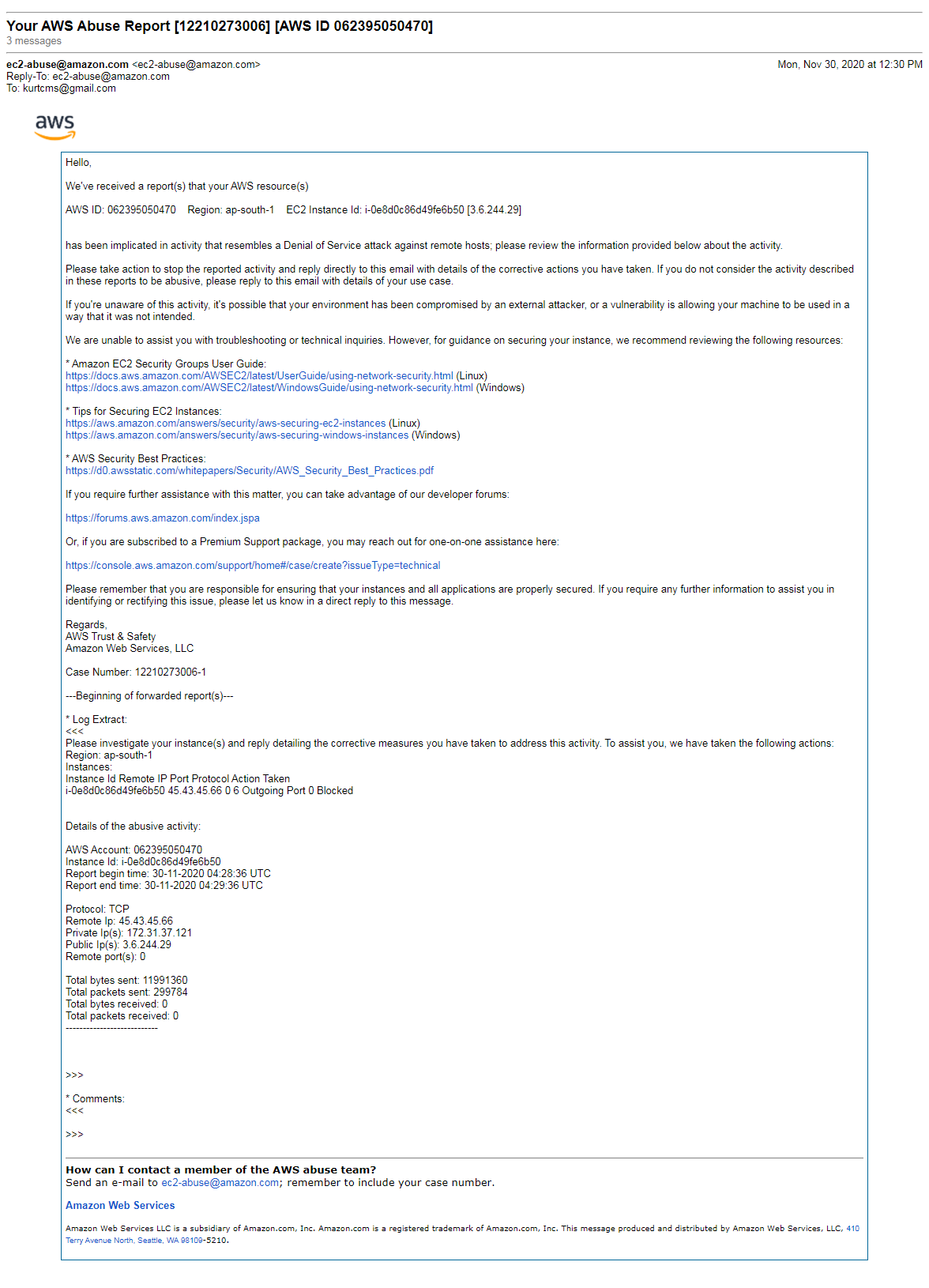

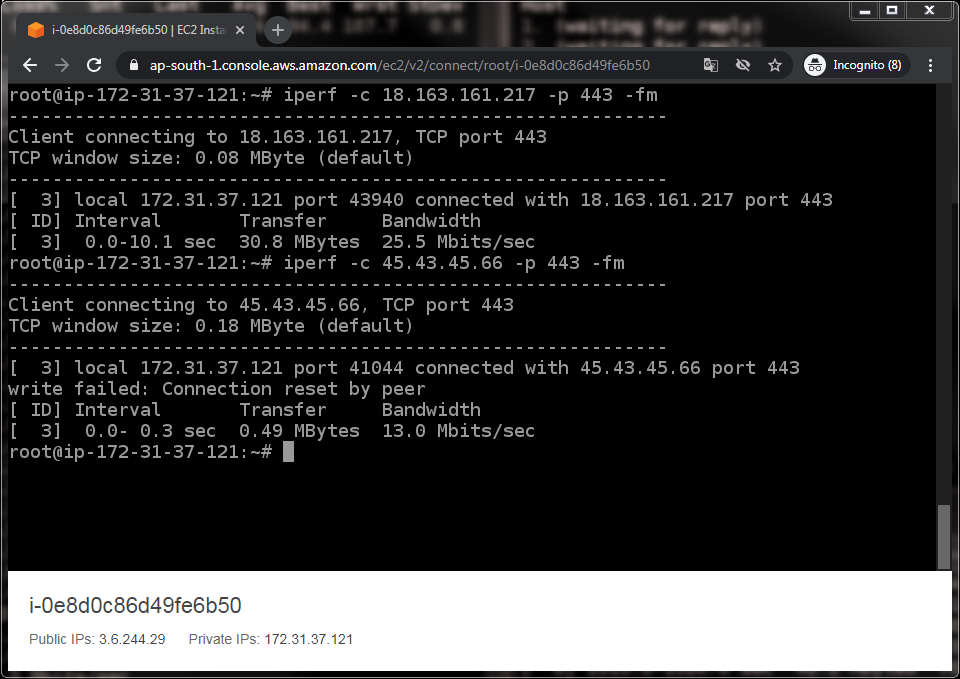

For reasons unknown, an iPerf session on TCP 443 would terminate within milliseconds with the IP address 45.43.45.66 that the extended network had at first provided for the Mumbai PoP.

The extended network was notified of this issue with 45.43.45.66 and provided another IP address, 45.43.45.141, for the evaluation.

vHost.vn Vietnam

At first vHost.vn Vietnam was commissioned as the cloud compute resource provider in Ho Chi Minh City for the evaluation. Preliminary testing of the vHost.vn VPS revealed, however, that as soon as an iPerf session was running, even though the reported throughput was merely around 500 kilobits per second (kb/s), the Ping latency from the vHost.vn VPS to the extended network Ho Chi Minh City PoP IP address or to the AWS Hong Kong destination would jump from 2-3ms to upwards of 100ms.

Further examination revealed that the increase in latency came from the immediate first hop at 210.211.114.177 by the state-owned Viettel, the incumbent and dominant ISP in Vietnam. A route adjustment, implemented by vHost.vn after it received and acknowledged a report of the observation, resulted in no significant change.

Traffic on TCP 443 in a production network would normally be HTTPS traffic which, in light of the rising trend of SaaS, was becoming the majority of business-critical traffic in an enterprise network. It would as such make sense for a network operator to prioritise TCP 443 traffic over the rest, or to throttle traffic on other protocols and ports as TCP 443 traffic rose, either of which would cause the jump in Ping latency observed. This was one of many possible explanations for the observation, and by no means the only one. Further verification might be in order, for if this was the typical behaviour of the internet in Vietnam, UDP 2426, on which the technology vendor for our Managed SD-WAN Service tunnelled traffic, might be severely affected.

AWS Network Stress Test Policy

AWS EC2 has a Network Stress Test Policy with which all load tests, stress tests and network tests need to comply, and which requires prior approval from the AWS Simulated Events Team for test items outside the pre-approved scope.

The original plan was to have a persistent TCP iPerf session that reported a bandwidth measure every minute for a round-the-clock probe. Such intensive round-the-clock probing, however, violated the Network Stress Test Policy and triggered a Denial of Service (DoS) alert from AWS. The iPerf sessions were as such reduced to 2 minutes each, 4 times an hour.